A straight-to-the-point approach to SEO that will teach you the basics you need to know.

Search engines like Google can be the starting point of new marketing ideas and profitable customer-business relationships.

As consumers, when we are looking for a business or organization that can provide solutions to our current pain point, we go to Google. On average, 3.5 billion searches are conducted on this popular search engine each day.

Therefore, if you want potential customers to find you online, you have to invest in search engine optimization (SEO).

When it comes to SEO, many business owners struggle to create and implement a strategy that will help their website rank. Others don't know where to start.

To ensure your website is on the right track, we have created a comprehensive beginner's guide to SEO. In it, we highlight and explain the fundamentals you need to drive your website's search engine rankings.

SEO, short for search engine optimization, stands at the forefront of all digital marketing efforts. B2B marketers need to understand what lies at the core of SEO and the key components of a reliable and effective SEO strategy.

While there is no single definition, put simply, SEO is the practice of increasing the quantity and quality of web traffic to your business website through organic search results from search engines.

Optimizing your site is an ongoing process that ensures Google and other search engines can index and properly read every webpage on it. Doing so allows your pages to rank for desktop, mobile, and voice-activated search queries. The cleaner and better optimized your website and pages are, the more traffic, and the higher-quality traffic, they are likely to receive.

For a successful B2B SEO strategy, a website’s content must provide quality information that answers users’ questions and helps them solve their pain points. It is equally important that the material is easy to read, so consumers spend more time on the website. Quality content should always be written with the user in mind and structured so that search engines can readily understand what it is about and how it relates to other sections of your site.

For example, if you are a construction business, you do not want Google or users to think you’re an architecture firm. You want to attract visitors who are interested in the products and services you offer. Appealing to your target audience improves the quality of the traffic your site receives and increases the chances that a visitor becomes a paying customer.

When you identify the audience you want to attract, you’re able to reel them in. Understanding your audience’s profile includes knowing:

In its purest form, SEO is all about providing search engine users with the highest level of value. If you’re aware of what an average customer wants and needs, and you’re able to deliver, your SEO efforts will result in higher levels of success.

In the following chapters, we’ll walk you through all the SEO basics you need to know to get started with search engine optimization.

Delivering value to customers is only one piece of the SEO pie, though it’s the most critical piece. You can create the most powerful and meaningful content for your customers, but if search engines are unable to crawl and index your site, no one will be able to find it, and you might as well never have produced it.
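Crawlability is usually the first thing to check. One common mechanism is a site’s robots.txt file, which tells crawlers which paths they may fetch. The Python sketch below is an illustration only: the rules, the example.com URLs, and the helper names build_parser and is_crawlable are hypothetical. It uses the standard library’s urllib.robotparser, which follows the original robots.txt convention and does not implement Google’s * and $ path wildcards. Note also that robots.txt controls crawling rather than indexing.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt rules from a string instead of fetching them over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_crawlable(parser: RobotFileParser, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the parsed rules allow this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    for page in ("https://example.com/blog/seo-basics", "https://example.com/admin/login"):
        status = "crawlable" if is_crawlable(rules, page) else "blocked"
        print(f"{page} -> {status}")
```

Run as a script, it reports the blog URL as crawlable and the admin URL as blocked, because the * rules disallow anything under /admin/ and /cart/.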

More than ever before, users want quality answers to their questions, fast. The quicker your site can answer a user’s pressing questions, the more likely they are to revisit your website later on.

The key to creating content that ranks among the top search results for search engines is understanding the way Google finds, analyzes, and ranks your content.

Content ranks primarily using two methods:

If the content is not relevant, it has little chance of ranking, even if it is authoritative. If your website is not trustworthy, it has little chance of ranking well on SERPs, no matter how relevant it is to your topic.

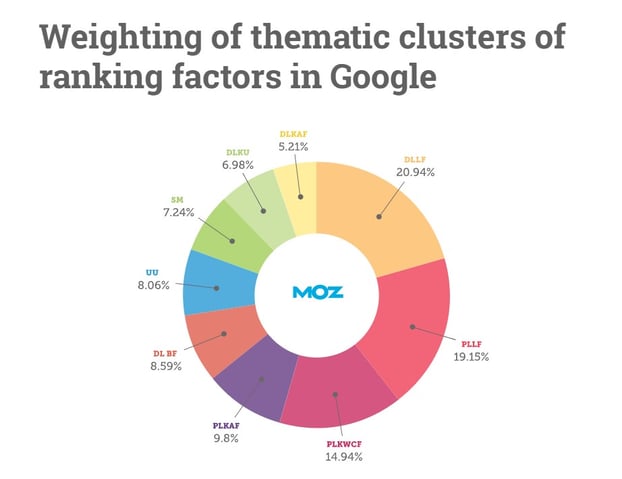

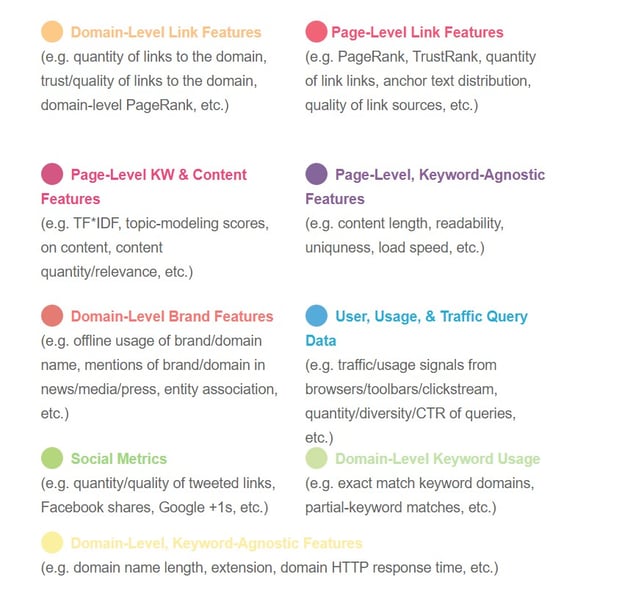

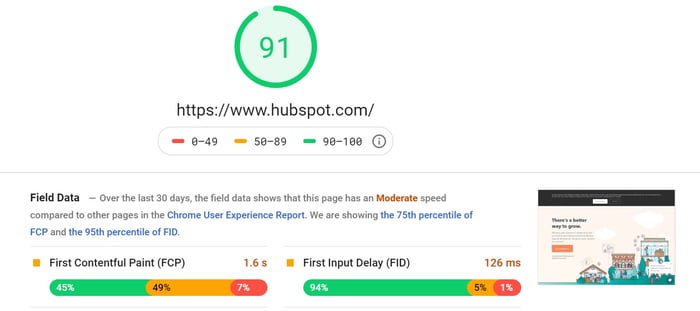

To build an efficient, SEO-friendly website, it helps to understand how search engine bots select the webpages featured on SERPs. Google’s search algorithm uses over 200 factors to match search terms with the content users are shown. HubSpot provides an entertaining breakdown of these factors.

Every business that aims for an SEO-friendly website must learn certain technicalities to enable them to work alongside Google bots and achieve top SEO results. Without this technical knowledge, it becomes increasingly difficult to compete with other businesses that are adapting.

An HTTP response code that indicates your requested page has permanently moved to a different URL.

An HTTP response code that indicates your requested page has temporarily moved to a different URL.

A status code used when a user does not have the necessary credentials to access the page.

A status code signaling that a user may not have the required permissions to access a page or may need an account to access it.

A status code used when a requested page couldn’t be found but may be available in the future.

A server status code that tells Google your site is temporarily down for maintenance and will be back online soon.
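The six entries above correspond to the HTTP status codes 301, 302, 401, 403, 404, and 503. As a minimal sketch, assuming you already have a status code from a crawl or a server log, Python’s standard http.HTTPStatus enum can translate each code into its official name. The SEO_NOTES text and the describe helper are illustrative additions, not part of any SEO tool.

```python
from http import HTTPStatus

# Plain-language notes paraphrasing the glossary entries above.
SEO_NOTES = {
    HTTPStatus.MOVED_PERMANENTLY: "page has permanently moved to a different URL",
    HTTPStatus.FOUND: "page has temporarily moved to a different URL",
    HTTPStatus.UNAUTHORIZED: "visitor lacks the credentials needed to access the page",
    HTTPStatus.FORBIDDEN: "visitor lacks permission, or needs an account, to access the page",
    HTTPStatus.NOT_FOUND: "page could not be found",
    HTTPStatus.SERVICE_UNAVAILABLE: "site is temporarily down, e.g. for maintenance",
}


def describe(code: int) -> str:
    """Return 'CODE Phrase: note' for a status code; raises ValueError for unknown codes."""
    status = HTTPStatus(code)
    note = SEO_NOTES.get(status, "no glossary note for this code")
    return f"{status.value} {status.phrase}: {note}"


if __name__ == "__main__":
    for code in (301, 302, 401, 403, 404, 503):
        print(describe(code))
```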

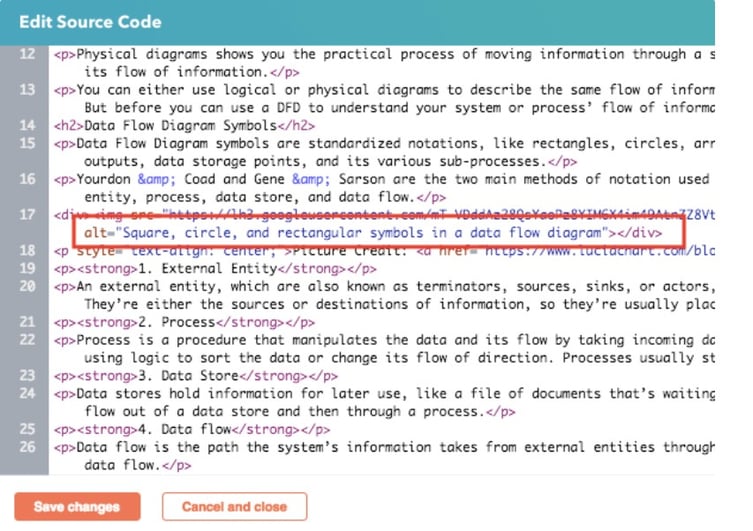

Alt-text is the text in HTML code that describes the images on webpages. You can learn how to write practical alt text here.

The words that make up a hyperlink and link to another webpage or document. They typically appear as underlined blue text.

Also known as “inbound links,” they are links from other websites that point back to your site.

SEO practices that violate Google’s quality guidelines.

An email standard that uses brand logos as an indicator of email authenticity and helps consumers avoid fraudulent emails.

An image or line of text that persuades a reader to take the desired action.

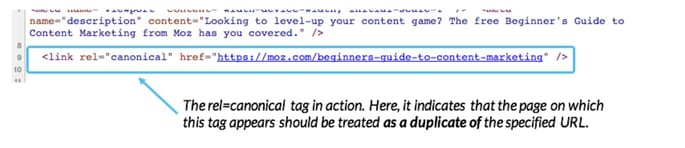

A piece of HTML code that tells search engines which URL is the original (preferred) version when several URLs have the same or similar content. It is written as rel="canonical" on a link element.

The code that makes a website look a certain way; this includes fonts and colors.

An anti-spam test that helps determine whether a user is human.

Software that allows users to publish, edit, and modify webpages within a website.

A metric that estimates the percentage of consumers who take the desired action. The desired action differs between campaigns and can range from watching a video to visiting a webpage or buying a product.

The process of changing marketing strategies, ads, and websites to increase conversion rates.

A program run by search engines that scours the Internet for web content. Crawlers are also known as search engine bots or spiders.

How a client feels about their overall experience with your business, including what they think about your brand.

A tech platform that is used for collecting and managing data from various sources.

The percentage of all the backlinks to your website that point to pages other than your homepage.

An information system that translates a domain name such as “cnn.com” into IP addresses so browsers can load Internet resources.

A search engine ranking score that predicts how well a website will rank on search engine results pages (SERPs). It is also known as domain authority and was created by Moz, an SEO software company.

A protocol often used for email validation, policy, and reporting. It aims to prevent email spoofing and fraudulent emails that take advantage of a recipient by contacting them through a forged sender address.

An email authentication method that allows recipients to verify that an email was legitimately sent and authorized by a business’s domain. This authorization takes the form of a digital signature attached to the email.

A markup language that is similar to HTML and allows users to modify their business Facebook profile.

An HTML version of a web page that Google creates and stores after it is indexed.

A web analytics platform developed by Google that allows users to track and report their website's traffic.

A Google search feature that retrieves content-based images for a search query.

A Google service that helps users monitor, maintain, and troubleshoot their website’s appearance in Google search results.

A tool that allows webmasters to manage and arrange any marketing tags (known as snippets of code or tracking pixels) on their website or mobile app without having to modify any code.

A Google platform that evaluates and alerts webmasters of issues with their website and provides solutions to fix them.

A standard markup language used to create webpages.

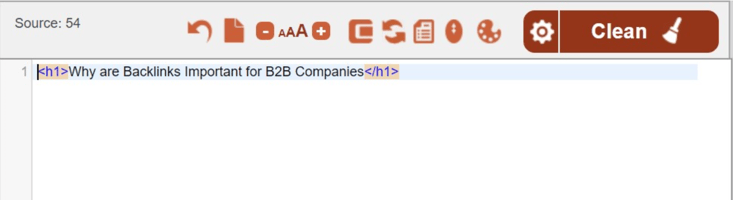

Tags that define headings in HTML. They range from H1 (the most important) to H6 (the least important).

HTML code used to create a link to another page.

The communication protocol used to connect to web servers on the Internet or on local networks (intranets). Its primary function is to establish a connection with a server and send HTML pages to the user’s browser.

An encrypted version of HTTP.

The process of searching for keywords relevant to your website and determining which of them can yield the highest ROI. Keyword research should answer: What are people searching for? How many people are searching for it? Where do they search for this information?

A form of off-page SEO in which your website earns links from other sites that direct readers to your own.

The process of improving aspects of a landing page to increase conversions.

The practice of earning hyperlinks that link from other websites to your own.

An SEO strategy that's used to increase the visibility of local businesses on search engines.

Snippets of text in a webpage’s source code that tell search engines what the page is about.

The practice of highlighting the important elements of content on a webpage to help it stand out in SERPs.

A code language that's designed to provide crawlers with information on a website's contents.

Essential business information that should be available online for the public to see. An up-to-date NAP will help organizations rank better on local organic search results.

Backlinks that happen organically. They are used to refer to a credible website, a piece of content, or a source.

A link building strategy in which a site gets inbound links from other domains without linking back to them.

The practice of improving aspects of a webpage to achieve a higher ranking in search results and earn quality website traffic.

A link on your website that points to a webpage on another site.

A score developed by Moz that predicts how well a specific webpage is likely to perform on search engine results pages (SERPs).

A single view of a page. Google Analytics uses a tracking code to record page views, which helps SEO specialists identify the webpages that receive the most traffic.

A metric used by Google to estimate the importance of a webpage based on the links pointing to it.

In backlink analysis, a referring domain (RD) is the domain a backlink comes from, that is, the site people came from before visiting your website.

Predefined tags from a semantic vocabulary that you can add to your HTML to improve how search engines read your webpage and represent it on SERPs.

The strategy of increasing the quality and the quantity of web traffic on your site by improving its rankings on search engines.

The rank of a webpage on an organic search results page (SERP).

The position a webpage ranks for on a SERP.

The pages a search engine presents to users after they search for something online.

An email authentication method that's used to prevent spammers from sending messages to others using your domain name.

Protocols for establishing authenticated and encrypted links between networked computers.

A measure of how topically relevant the backlinks to your website are.

The name of a web address.

An analytics tool used by marketers to track the impact of their online efforts, understand their audience’s behavior and measure performance.

Every aspect of a user’s experience with a company and its services. In SEO, UX refers to a user’s experience while navigating a website.

The use of optimization strategies and techniques that focus on the human experience and follow search engine policies and regulations.

Any webpage that’s created to improve search engine rankings by violating Google’s Webmaster Guidelines but doesn’t provide any value to readers.
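Schema markup and NAP, both defined above, often appear together on local business sites. The sketch below builds a schema.org LocalBusiness block in JSON-LD, one of the structured-data formats Google reads, from a business’s name, address, and phone number. The business details are made-up placeholders and the function name is hypothetical; check the current schema.org and Google structured-data documentation for the properties your business type supports.

```python
import json


def local_business_jsonld(name: str, street: str, city: str, region: str,
                          postal_code: str, phone: str, url: str) -> str:
    """Build a schema.org LocalBusiness JSON-LD snippet carrying a business's NAP."""
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
        },
        "telephone": phone,
        "url": url,
    }
    # JSON-LD is embedded in the page inside a script tag of this type.
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2)
            + "\n</script>")


if __name__ == "__main__":
    # Every value below is a made-up placeholder.
    print(local_business_jsonld(
        name="Example Construction Co.",
        street="123 Main St",
        city="Springfield",
        region="IL",
        postal_code="62701",
        phone="+1-555-0100",
        url="https://example.com",
    ))
```

Keeping these values identical to the NAP shown elsewhere on the site and in business listings supports the consistency that local SEO relies on.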

When you conduct a search query, it is useful to understand the process that gives you the SERPs you receive. Understanding how search engines retrieve the results they display for users will help you to know how you can design content on your page to improve your site’s rankings. With this, your webpages can hopefully show up when someone searches for a service you provide.

A business’s online success largely depends on building an SEO-friendly website. Ranking on the first page of results is critical for clicks: 75% of search engine users never scroll past the first page. If you’re not featured on page one, most users will not know your webpage exists.

The key to SEO success is to facilitate a search engine crawler’s job of scanning your content and categorizing it accordingly. This way, when a prospective customer is looking for information you provide, Google has every reason to rank your webpages in the top results.

Businesses should integrate a variety of elements to achieve optimum SEO results. We’ve grouped these elements into the four major types of SEO every company should be implementing: on-page SEO, off-page SEO, technical SEO, and local SEO, which we’ll cover in the following chapters.

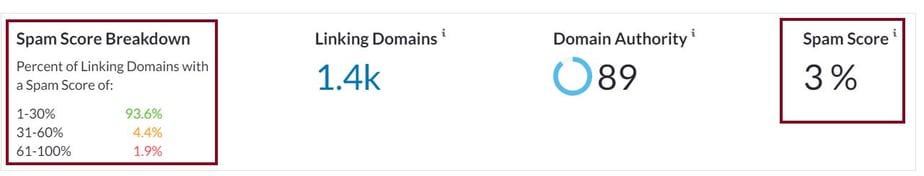

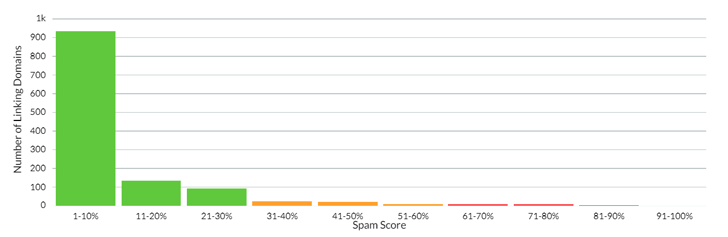

(Source: Moz)

(Source: Moz)

While countless factors influence web page ranking on Google, some carry more weight than others. To become an SEO powerhouse, consider the following when devising your SEO strategy.

On-page SEO refers to everything a user can see on a webpage. It includes strategies businesses can use to optimize an individual page on their site.

Every element that contributes to on-page SEO should work together so that search engines understand the content of a page, identify whether it is relevant to a search query, and consider the site a valuable source worthy of displaying on SERPs, and so that visitors have a good user experience.

Putting time and effort into developing your on-page SEO brings you closer to higher site traffic and a stronger overall web presence.

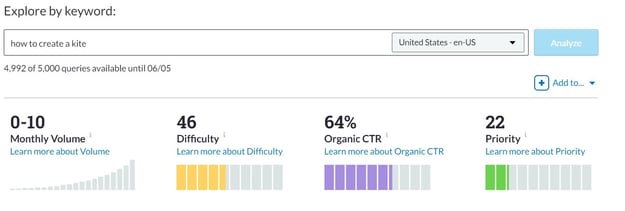

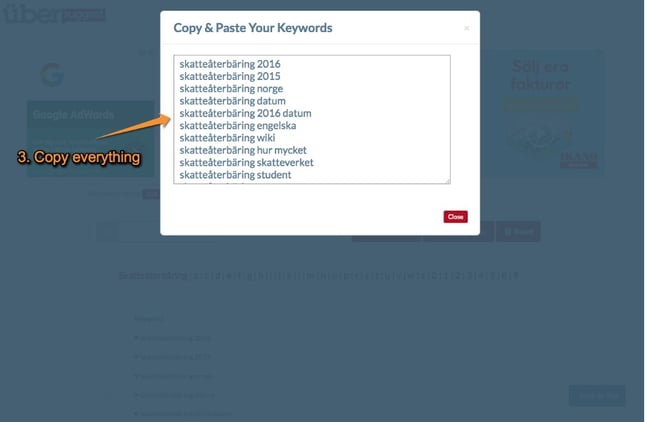

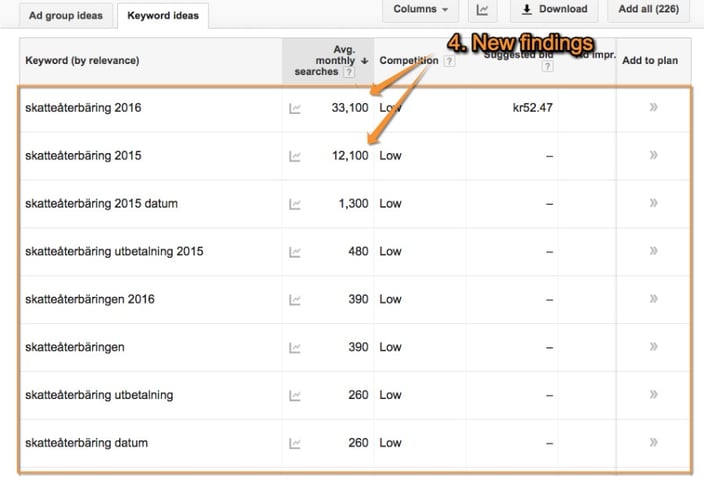

Before publishing a webpage worthy of high rankings, you need to invest some time in keyword research. During this process, a digital marketer looks for sets of keywords that mirror what their site’s target audience is likely to be using.

Keyword research has become an integral step in the content creation process. It often dictates the various topics that new content will cover.

In other words, it is not enough to provide users with the right answers. As websites get better at giving useful information, how a site presents its content becomes a significant tiebreaker for ranking under more competitive keywords.

Remember, the goal of SEO is to get your website’s content indexed by Google and found by your target audience. A whopping 75% of people do not scroll past the first page of a SERP, which makes it all the more important to target most of the keywords your audience is likely to use.

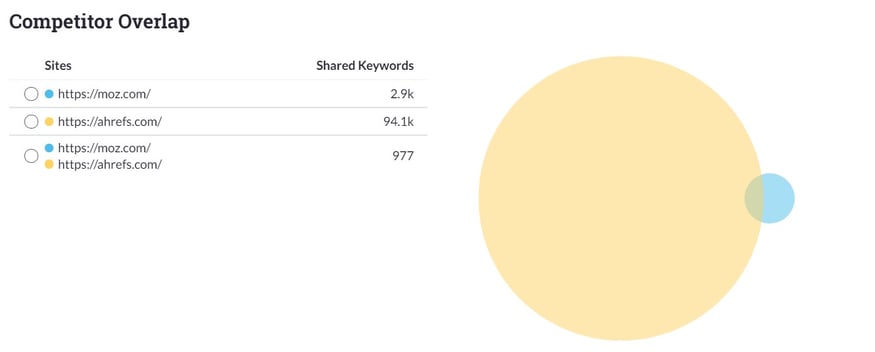

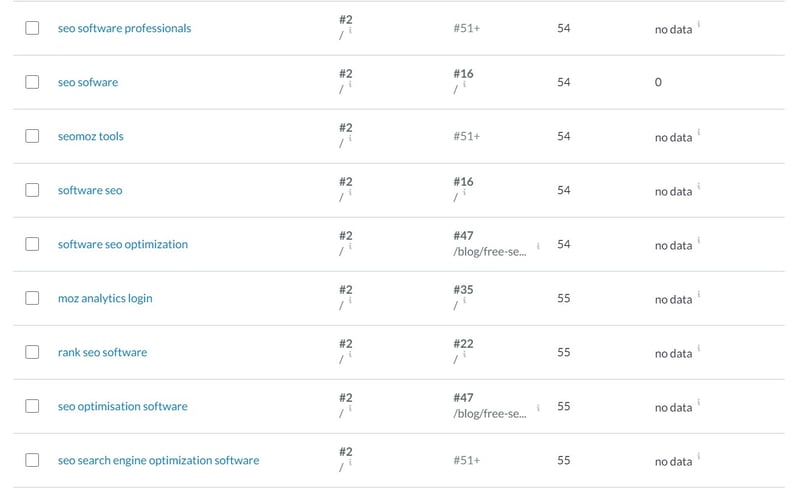

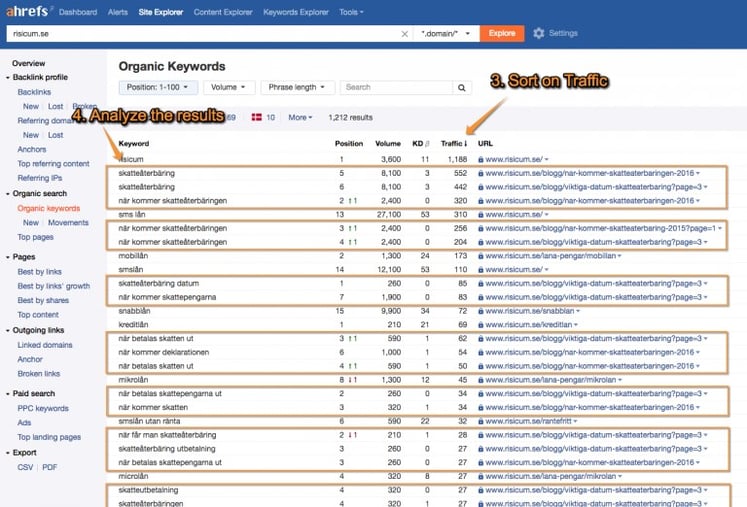

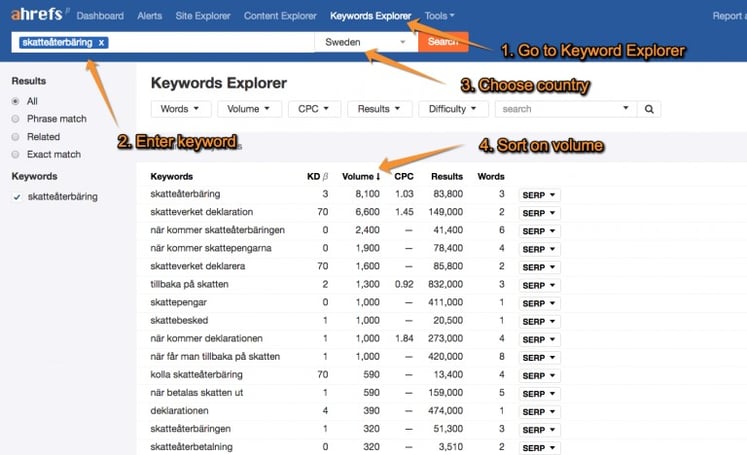

Tools like Moz Keyword Research Tool, Ahrefs Keywords Explorer, and Answer the Public help businesses decide which keywords to target for better SERP results. These tools try to reverse-engineer how Google decides which search results to show users.

They work well in helping you develop an understanding of what words people are searching for and how much competition there is for those specific words. These tools will also provide you with other similar words and phrases that people may search for that you can target. If you’re feeling a little lost, tools like Moz Keyword Research Tool are excellent aids for uncovering a searcher's intent and determining the problems they are trying to solve.

When choosing the keywords to build your new content around, investing time to discover the ones your direct competitors use can help you build a better SEO-enhanced content strategy. This research can expose gaps in their content and help you create content that takes some of their traffic.

To help in this endeavor, you can use a data management platform (DMP). DMPs like HubSpot, Google BigQuery, and Amazon Redshift help businesses better understand their customers’ demographic and psychographic information so that they can draw in their target audiences and persuade them to purchase their products or services.

When it comes to keyword research, DMPs can help businesses combine the information they have about their buyer personas to brainstorm better keywords that would best align with their different personas.

If you’d like more information on performing keyword research, you can consult Moz’s in-depth guide.

If you want to rank higher and see an increase in your web traffic, it is crucial to understand what readers want and why they are searching for it.

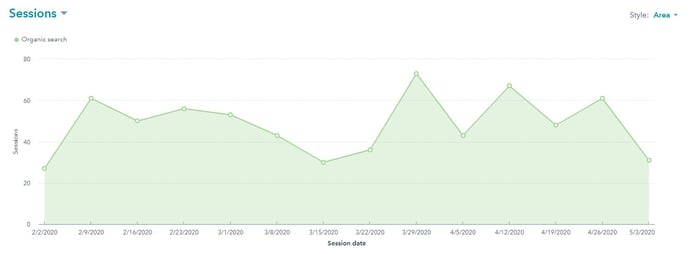

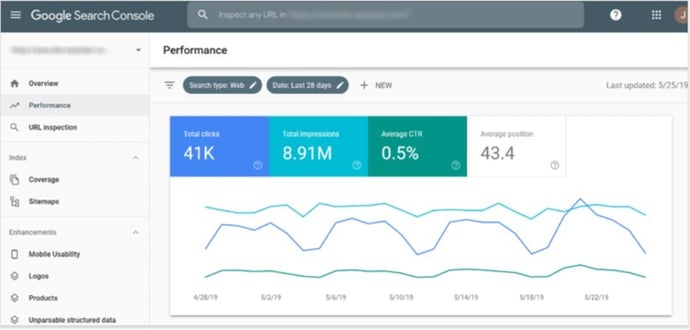

Google Analytics and Google Search Console give businesses insight into the user intent behind search queries. Google Search Console helps companies identify the typical search terms that people use to get to their site, as well as those terms that do not drive much web traffic.

Once a person lands on your website, Google Analytics helps businesses identify the content that users engage with the most and how long they engage with it. Keep in mind that the information this platform provides is a generalized overview of all site visitors and cannot be broken down to track specific users.

As a common rule, search queries typically fall within one of four user intents:

Building webpages that target a specific search intent and use relevant keyword phrases increases their chances of showing up on a search engine results page (SERP).

The Internet is jam-packed with new information every day: an average of 380 new websites are created every minute, and 500 hours of video are uploaded to YouTube every minute. For sites working hard to make their content visible, the quality of the text, blog posts, graphics, infographics, and videos they produce needs to be top-notch.

When deciding how to rank a webpage against others that address similar topics, Google bots will rank webpages based on the answer to these questions:

How many times does this page use keywords from the search query?

Is this page high quality or low quality?

For a webpage to successfully rank on page one, its content must use the targeted keywords and keyword phrases in a way that respects the natural flow of ideas. Practices like keyword stuffing violate Google’s quality guidelines and are considered black hat SEO.
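To make the idea concrete, here is a rough Python sketch that counts how often a keyword phrase appears in a piece of text and what share of the words it accounts for. Google publishes no keyword density limit, so the 3% REVIEW_THRESHOLD_PERCENT below is purely a hypothetical trigger for a human reread, not a ranking rule; the function names are also illustrative.

```python
import re

# Hypothetical review threshold: Google publishes no "safe" keyword density.
REVIEW_THRESHOLD_PERCENT = 3.0


def _tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def keyword_stats(text: str, phrase: str) -> tuple[int, int, float]:
    """Return (occurrences, total_words, percent_of_words) for an exact keyword phrase."""
    words = _tokenize(text)
    target = _tokenize(phrase)
    size = len(target)
    if size == 0 or not words:
        return 0, len(words), 0.0
    # Slide a window the length of the phrase across the text and count exact matches.
    hits = sum(1 for i in range(len(words) - size + 1) if words[i:i + size] == target)
    percent = 100 * hits * size / len(words)
    return hits, len(words), percent


def needs_review(text: str, phrase: str, threshold: float = REVIEW_THRESHOLD_PERCENT) -> bool:
    """Flag text whose keyword share exceeds the (hypothetical) threshold."""
    return keyword_stats(text, phrase)[2] > threshold
```

A short, repetitive line such as "Meditation tips: how to start meditating. Start meditating today." contains the phrase twice in nine words and is flagged, which is exactly the kind of unnatural repetition a reader would notice too.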

To help readers identify the main points within a piece of text, you can choose to highlight, bold, or use italics to emphasize parts of your writing. Use header tags, title tags, and alt text to help Google index your content better.
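Missing alt text is easy to audit automatically. This sketch uses Python’s built-in html.parser to list images whose alt attribute is missing or blank. The class and function names are hypothetical, and an empty alt is legitimate for purely decorative images, so treat the output as a review list rather than a list of errors.

```python
from html.parser import HTMLParser


class AltTextAuditor(HTMLParser):
    """Collect the src of every <img> whose alt attribute is missing or blank."""

    def __init__(self) -> None:
        super().__init__()
        self.missing_alt: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        # A bare "alt" attribute with no value is reported by HTMLParser as None.
        if alt is None or not alt.strip():
            self.missing_alt.append(attributes.get("src") or "(no src)")


def images_missing_alt(html: str) -> list[str]:
    """Return the src values of images that need alt text reviewed."""
    auditor = AltTextAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor.missing_alt
```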

Google’s top priority is providing users with great content that 1) serves a purpose and 2) can be of service to someone, so everything on your site must meet these criteria.

Websites that provide quality content get a boost in their rankings, while those that create low-quality content (per Google’s standards) are not rewarded and receive less online visibility.

What criteria does Google use to decide whether a piece of content is excellent, good, or bad? The answer lies in its Search Quality Evaluator Guidelines, in which the search engine giant outlines three golden principles for evaluating webpages and determining the quality of their information.

These three principles are:

The beneficial purpose principle emphasizes that every webpage must have a user-oriented purpose, which can range from informing people to entertaining them or selling them something. However, a website cannot be created with the sole intention of making money without giving any value to others.

Under this principle, all web content needs to possess the following qualities.

Expertise: The person behind every piece of content must have a certain level of knowledge on the topic at hand. Whether they have the credentials to support their expertise or real-life experience, the information they publish on the Internet must be legitimate and correct.

Authoritativeness: Every webpage and the business behind it should have a level of authority in their field. For this reason, establishing domain authority and earning good, high-quality backlinks is essential. Every company should aim to create content that is interesting and authoritative enough to attract links from other websites.

Trustworthiness: Users should be able to trust that the content, the site, and the business behind it provide correct and accurate information about the topics under discussion.

This principle addresses the type of content that, if misrepresented, can directly affect a user’s health, safety, or financial stability. For this reason, every website that publishes content in any of the following industries should have a clear understanding of Google’s quality expectations.

While Google’s quality standards may change slightly over time to adjust to the ever-changing digital landscape, its emphasis on ranking the highest-quality content first will stay the same.

There is often a fine line between the right and wrong way to write content that is sufficiently long, relevant, engaging, and backed by reliable sources. When brainstorming a content piece, consider what information you need to include to reach the 2,100 to 2,400-word range commonly cited as the norm for good content today.

We know the importance of targeting keywords on a webpage. When a user searches for a string of keywords, a search engine will retrieve results that are high quality, authoritative, and relevant to the search query.

However, as queries have become more and more complex, merely targeting specific keywords on a blog post or article is not enough. People today are comfortable asking complicated questions, and they want accurate search results.

To stay on top of this growing trend, search engines have become smarter at identifying the connections between different keyword searches. Search engines understand the topical context behind a user’s query, so they can relate one person’s keyword search to similar searches they have handled in the past. For example, if you search for “1980s song whose music video included Michelle Pfeiffer,” Google will retrieve many relevant results for this query by drawing on past searches from other users.

For this reason, today’s websites need to focus on building content around topics and NOT for specific keywords. You may be asking: what’s the difference between a keyword and a topic to search engines? A keyword is a word or phrase used to perform a search. A topic is the broader subject that a group of related keywords and questions belongs to.

To start creating content with topical relevance, identify the topics you want your site to be known for. For example, if you provide SaaS to other businesses, you will want to create content that answers any question your customer base is likely to have about that subject.

Doing so will generate more relevant web traffic to your site, increase your chances of ranking on the first page for searches related to your target topics, and grow your site’s authority in that area.

To help with this endeavor, you can use data management platforms to merge any data your customers and prospects have provided with data from third parties. Information such as demographics, geographical location, lifestyle choices, and purchase behavior can be used to have a greater understanding of the interests, wants, and needs of your target audience. You can use this knowledge to craft more relevant and persuasive content that can help convert your audience.

The best advice anyone can give you on succeeding at SEO is to focus on the user (your target audience), and not on search engine algorithms.

Algorithms can be reprogrammed, but your target audience will always want one thing: quality information that answers their questions. If you focus your optimization efforts on that now, adjusting your SEO strategy to mirror Google algorithm updates will be much less painful.

Keeping tabs on these changes is important not only for your SEO but also for your site’s reputation. A website that does not follow general SEO guidelines can incur penalties such as lower rankings or manual actions.

In some cases, a site can be penalized for over-optimization because Google sees it as an attempt to cheat the system with black hat tactics. Over-optimization can include webspam, unnatural links, and keyword stuffing.

Google has taken a focused approach toward addressing these practices by introducing updates like the Penguin and Panda algorithm changes that aim to penalize these sites.

We know Google wants quality websites and quality content, but what does quality mean?

Quality refers to in-depth coverage of a topic. The key to quality is providing real value to users in an informative and entertaining way.

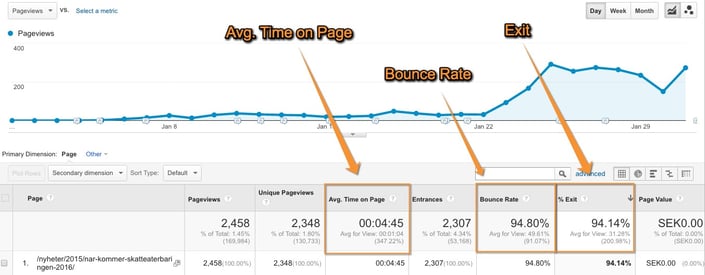

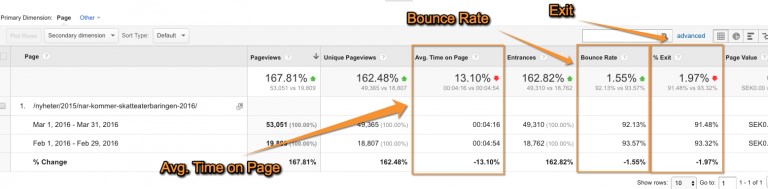

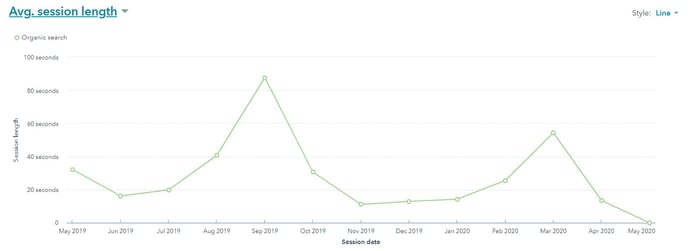

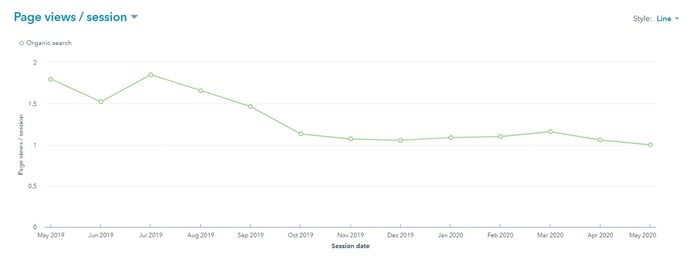

A common way for a website to measure the quality of its published material is the amount of time people spend on its pages and the number of shares its content receives on social media. You can learn more about building quality content here.

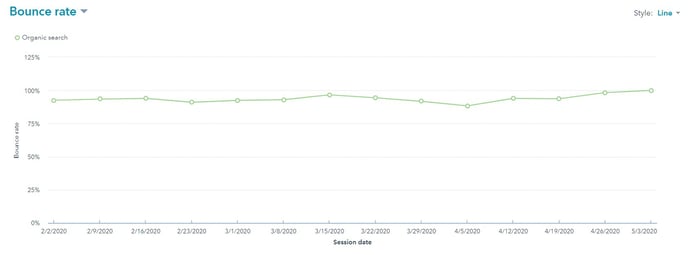

You want high-quality information on your website to give value to your readers, rank higher on search engines, increase the time users spend on your page(s), and lower the bounce rate.

When optimizing your content, your aim should be to answer any questions a user may have about the topic.

For example, if someone searches for “how to start meditating,” you ideally want your webpages to rank in the top search results. You want this person to click on your webpage, read it, take what they need from it, and do something else.

What you DO NOT want is for them to click on your site, leave within a few seconds of landing on it, and keep looking through other search results. The user in this scenario is “pogo-sticking,” which is bad news for sites: it tells Google that the first page (and the others that follow) did not answer the user’s question, which in Google’s eyes is a big thumbs down.

In November of 2011, Google released its freshness algorithm update, which at the time was estimated to impact around 35% of search queries. This meant that if the content your site was publishing fit into any one of the specified categories, and you wanted to maintain your SERP rankings, you needed to make some serious updates.

Updating past articles with new content and images can increase a site’s organic traffic by 106%. From an SEO standpoint, you want that for your website!

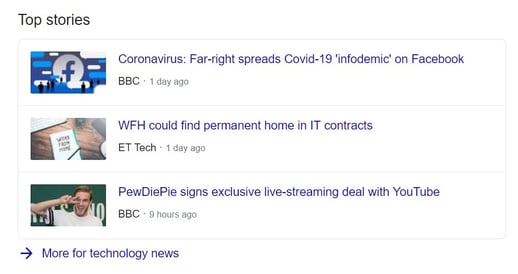

Within this category, we find the latest trending topics that need the most up-to-date information. When you search for a recent event, you will likely see results published anywhere from a few minutes to a few hours ago. Examples of this category are the Coronavirus pandemic and the state of the world economy.

Regularly occurring events can be anything from yearly sports tournaments to presidential elections. What matters is that users expect the latest information on these topics.

Most website content is likely to fall within this category, which can include informational blogs ranging from the latest money-saving tips to web design trends.

Updating your content ensures anyone reading it will get the latest information, and lets others know you care about delivering the best and most current information available. Publishing current and relevant content will help bring in more web traffic and is more likely to encourage social media engagement than outdated or inaccurate content.

However, not every piece of content online will require an update. Articles that cover historical events generally won’t need one. The closer a written account is to the historical event, the higher it is likely to rank compared with a more recent article about the same event.

Evergreen content doesn’t require frequent updating because it stays relevant regardless of when it was initially published, so its web traffic is likely to increase over time.

How-to guides, how-to videos, beginner’s guides, articles that answer common questions, case studies, lists, checklists, statistics, and product reviews are all types of evergreen content.

Examples of B2B evergreen content include articles like: “How to use Google Analytics to track campaigns,” “What is marketing automation, and why do you need it,” “Top 15 SEO words and phrases you need to know”, and “SEO mobile marketing case studies.”

While this content stays relevant over time, it will still need updates as information changes and new features are added. Keeping it current can prevent Google from ranking another business’s evergreen content above your own on SERPs.

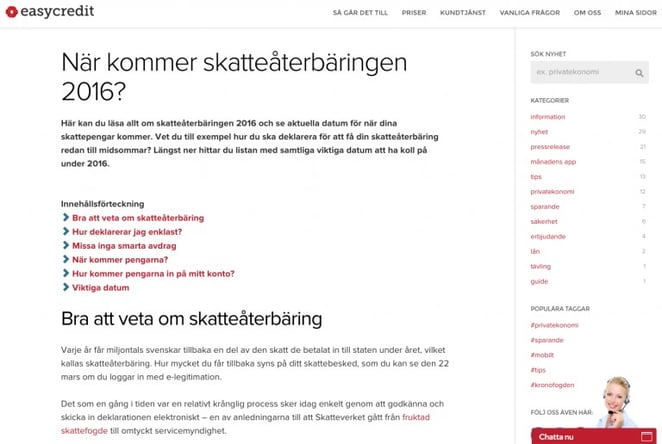

When designing an SEO-friendly webpage, you need both great content and a proper page structure.

As more websites improve their content marketing and provide their readers with in-depth answers to their pain points, the page structure on a site becomes a significant tiebreaker when ranking for more competitive keywords.

When deciding how to rank different pages for similar keywords, Google bases its results in part on the answer to this question: do the keywords in the search query appear in the title, the headline, and the URL of the page?

A good content structure follows a logical flow of ideas. You achieve this naturally by using headers, subheaders, and other HTML tags to help readers understand how your thoughts are broken down.
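A simple way to check that question for your own pages is sketched below: it pulls the title and the first H1 out of a page’s HTML and looks for the keyword there and in the URL path. It is a rough illustration under stated assumptions (exact phrase matching after lowercasing, hyphens and underscores treated as spaces); the function names and example URLs are hypothetical, and Google’s actual matching is far more sophisticated.

```python
from html.parser import HTMLParser
from urllib.parse import unquote, urlparse


class TitleH1Parser(HTMLParser):
    """Capture the text of the <title> element and of the first <h1> element."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.h1 = ""
        self._current = None  # tag whose text is currently being collected
        self._h1_done = False

    def handle_starttag(self, tag, attrs):
        if tag == "title" or (tag == "h1" and not self._h1_done):
            self._current = tag

    def handle_endtag(self, tag):
        if tag == self._current:
            if tag == "h1":
                self._h1_done = True
            self._current = None

    def handle_data(self, data):
        # Text inside nested tags (e.g. <em>) still counts toward the open title or H1.
        if self._current == "title":
            self.title += data
        elif self._current == "h1":
            self.h1 += data


def _normalize(text: str) -> str:
    """Lowercase and treat hyphens/underscores as spaces so URL slugs compare with prose."""
    return " ".join(text.lower().replace("-", " ").replace("_", " ").split())


def keyword_placement(html: str, url: str, keyword: str) -> dict[str, bool]:
    """Report whether the keyword phrase appears in the title, first H1, and URL path."""
    parser = TitleH1Parser()
    parser.feed(html)
    parser.close()
    kw = _normalize(keyword)
    path = unquote(urlparse(url).path).replace("/", " ")
    return {
        "title": kw in _normalize(parser.title),
        "h1": kw in _normalize(parser.h1),
        "url": kw in _normalize(path),
    }
```

Because it uses plain substring matching, a short keyword can also match inside a longer word, so the result is a prompt for a manual look rather than a verdict.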

Using the H1 HTML tag in your article headline and including one or more of your targeted keywords will encourage users who just clicked on your page to stay on it.

Headings H2-H6 can and should be used to organize the rest of your content, making it easier for readers to scan the title of each heading and instantly know what each section is about without having to read the text.
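The heading conventions above can be checked with a short script. The sketch below collects heading levels in document order and flags two common structural problems: a page without exactly one H1, and a jump that skips a level (for example, H2 straight to H4). Both rules are widely used editorial conventions assumed here for illustration, not documented Google requirements, and the names are hypothetical.

```python
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class HeadingCollector(HTMLParser):
    """Record the level of every heading tag in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self.levels.append(int(tag[1]))


def heading_issues(html: str) -> list[str]:
    """Return human-readable notes about the page's heading outline."""
    collector = HeadingCollector()
    collector.feed(html)
    collector.close()
    levels = collector.levels
    issues = []
    h1_count = levels.count(1)
    if h1_count != 1:
        issues.append(f"expected exactly one H1, found {h1_count}")
    # Going deeper by more than one level at a time skips part of the outline.
    for previous, current in zip(levels, levels[1:]):
        if current > previous + 1:
            issues.append(f"heading level jumps from H{previous} to H{current}")
    return issues
```

Moving back up the outline (H3 to H2) is not flagged, since closing a subsection and starting a new section is normal.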

Accurately citing your sources and including bullet points, lists, and images all work together to create more visually appealing and engaging material for others to read.

Other things you can do to improve your content structure include:

A heading draws a reader’s attention. By adding keywords to your headings, you reinforce how relevant your article is to the reader’s search intent.

You can also emphasize keywords through different formatting, fonts, or font colors. Keep in mind that any emphasis should follow your brand’s design so the page still looks appealing.

The bottom line for content structure is this: the more engaging your content, the longer visitors are likely to stay on your website. That is a good thing, because engagement signals such as average time per session are widely considered to go hand in hand with higher Google rankings.

A landing page is a single webpage meant for a specific marketing or advertising campaign. It is the page you are taken to after clicking on a link in an email, an ad, or a SERP (search engine results page).

As Seth Godin puts it, a landing page can have one of five intentions:

Landing pages are standard in Lead Capture, PPC, and Email Marketing efforts. They are meant to provide a “Welcome” message to visitors who’ve just landed on your site. At their baseline, they aim to create a positive user experience.

In general, both Google’s algorithms and good SEO strategy prioritize excellent user experiences. That is why using landing pages for SEO makes sense.

You may be asking yourself, “but why would I want to optimize a landing page for SEO?”

The short answer is:

In reality, most landing pages on the web do not rank on the first page of search results. The reason is that competing webpages have far higher word counts than most landing pages, and that is by design.

Landing pages serve a specific purpose: to help website visitors convert. They are meant to get visitors to take a particular action, and they do this with minimal text and a touch of visual appeal. If they were filled with endless text, they would not do a great job of increasing conversions.

Landing pages built for maximum SEO results need to focus on ranking rather than converting. Ideally, an SEO-enhanced landing page accomplishes both, which is difficult but not impossible.

Let’s look at how you can tweak an existing landing page on your site through landing page optimization, the process of improving aspects of a landing page to increase conversions.

Optimize your URL, page title, and headers to reflect the keywords you’re targeting.

Including useful content is a crucial distinction between a conversion and an SEO-focused landing page. To get your landing page to rank, you will need lots of helpful content that can also appeal to other sites for link building.

For example, say you recently published a landing page to promote a holiday discount your company is offering on business management software. To optimize this landing page for SEO, you could include a case study on how other businesses have improved their overall customer experience (how clients feel about their overall experience with your business, including what they think of your brand) using this software. You will also want to earn links from other pages that recommend your software and from other sites that publish content related to customer acquisition.

A landing page wouldn’t be one without a good CTA that encourages users to take a specific action. You want Google to rank your page for a search that’s relevant to the product or service your landing page is promoting. Help your target audience by telling them what you would like them to do.

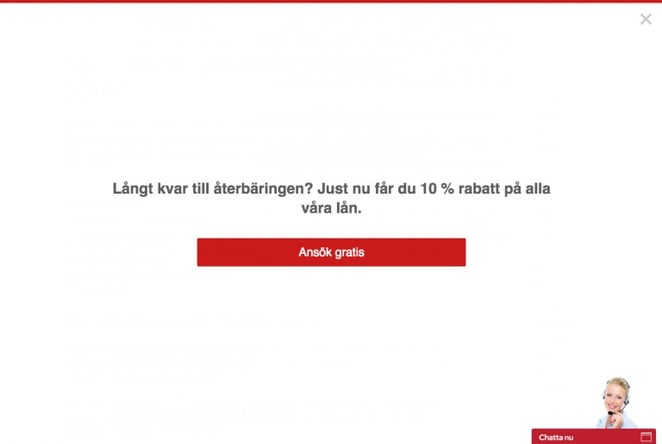

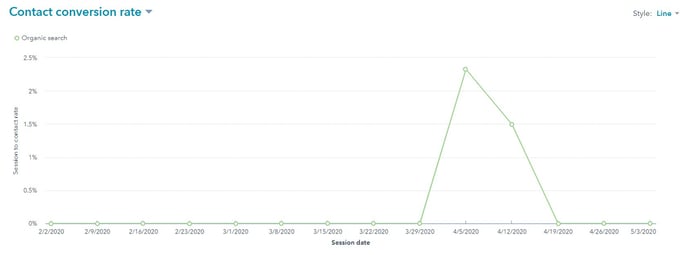

Conversion rate optimization (CRO) refers to the process of increasing the percentage of site visitors who convert. This process involves determining how individuals navigate through your site, which pages pique their interest, and what is stopping them from completing your conversion goals.

Before we get into the finer details, let’s recall that a conversion is the desired action that a business wants prospects to take.

Every business and every marketing campaign will have a different idea of what a conversion is to them. For example, a conversion for a B2B software company could be that visitors to their site sign up for a free demo. Once a certain percentage of these individuals sign up and try the software demo, another conversion goal could be to persuade them to purchase an annual subscription for this software.

These two very different conversion goals follow a specific order: one cannot be achieved without the other happening first. You cannot get people to purchase your software if they know nothing about you or how your software works.

There are two types of conversions.

Macro-conversions that include:

Micro-conversions such as:

Before a macro-conversion can take place, a micro-conversion usually needs to happen first, as in our example and many real-life situations.

Conversions are essential because a website receives only a finite amount of web traffic. Smart businesses invest their marketing budgets in making the most of their web traffic by increasing their conversion rates; that way, they can spend less on advertising and receive the same benefits.

A conversion rate is the number of times site visitors complete a conversion goal (e.g., creating an account) divided by your site’s total traffic, expressed as a percentage:

conversion rate = total number of conversions / total number of sessions * 100

Let’s use the example of the B2B software business. Let’s imagine its website receives 150,000 different visitors in one month. Within this month, 3,000 different people decided to sign up for a free software demo.

conversion rate = 3,000 / 150,000 * 100

conversion rate = 2%
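The same calculation can be written as a small Python function. This is a minimal sketch that treats sessions as the traffic measure and reuses the numbers from the example above (3,000 demo sign-ups out of 150,000 sessions); the function name is illustrative.

```python
def conversion_rate(conversions: int, sessions: int) -> float:
    """Return the conversion rate as a percentage of sessions."""
    if sessions <= 0:
        raise ValueError("sessions must be a positive number")
    # Multiply before dividing to keep the arithmetic exact for round numbers
    return conversions * 100 / sessions


rate = conversion_rate(3_000, 150_000)
print(f"Conversion rate: {rate:.1f}%")  # Conversion rate: 2.0%
```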

In this example, the conversion rate comes out to 2%. Considering that the average conversion rate for new B2B websites is 3%, the marketing campaign for the business in our example will likely need some optimization.

Keeping track of your site’s conversion rate will help you determine how well your marketing campaigns, your webpages (including landing pages), and apps (if applicable) are performing.

When your marketing campaigns consistently achieve healthy conversion rates and increase over time, a smart move is to start considering how you can optimize your conversion rates.

To start optimizing your conversion rates, consider which marketing campaigns would benefit the most from optimization. Improving a campaign with a 0.5% conversion rate might not deliver the same return as improving one with a 1% conversion rate. Experts suggest ranking your campaigns by their potential, their importance, and how easily you could optimize them.
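One common way to put this ranking into practice is to score each campaign from 1 to 10 on potential, importance, and ease, then sort by the average (an approach sometimes called the PIE framework). The sketch below assumes that 1-10 scale and equal weighting; the campaign names and scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Campaign:
    name: str
    potential: int   # how much room for improvement there is (1-10)
    importance: int  # how valuable the campaign's traffic is (1-10)
    ease: int        # how easy the change is to make (1-10)

    @property
    def score(self) -> float:
        # Equal-weight average of the three criteria
        return (self.potential + self.importance + self.ease) / 3


def prioritize(campaigns: list[Campaign]) -> list[Campaign]:
    """Return campaigns ordered from highest to lowest priority score."""
    return sorted(campaigns, key=lambda c: c.score, reverse=True)


# Hypothetical campaigns and scores, for illustration only
campaigns = [
    Campaign("Free demo landing page", potential=8, importance=9, ease=6),
    Campaign("Newsletter sign-up form", potential=5, importance=4, ease=9),
    Campaign("Annual subscription checkout", potential=7, importance=10, ease=3),
]

for c in prioritize(campaigns):
    print(f"{c.name}: {c.score:.1f}")
```

Weighting the criteria differently (for example, doubling importance) is a reasonable variation if some campaigns matter far more to revenue than others.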

To help you choose which campaigns are worth optimizing, your marketing team can answer questions like:

Here are some things you can do to improve your conversion campaigns:

Include landing pages after blogs

Landing pages serve as a platform for websites to ask site visitors for more information.

Writing compelling copy that engages readers and including a landing page pop-up ad is a winning combination. For best results, run split tests to get the most from this combination. You can test website copy, images, form questions, the number of form fields, page design, calls-to-action (on your blog post and landing page), and your content offer.
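To judge whether a split test's winning variant is more than random noise, many teams compare the two conversion rates with a two-proportion z-test. This is a minimal sketch that assumes each visitor sees only one variant and that sample sizes are large; the visitor and conversion counts are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical test: variant B uses a different call-to-action
z, p = two_proportion_z_test(conv_a=200, n_a=10_000, conv_b=260, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (commonly below 0.05) suggests the difference is unlikely to be due to chance alone; it does not say how large the real-world lift will be.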

Use video for greater engagement

A 2017 HubSpot study found that 54% of consumers would prefer to see brands use more videos in their content. Video content that’s entertaining, informative, and funny tends to perform well with audiences.

Videos also give brands the opportunity to make their value proposition clear without coming off as pushy or salesy, two attributes that often put consumers off. Videos do not have to be lengthy: the average audience attention span is often cited as about 9 seconds. When a video’s script and visuals (be it animation, client testimonials, or authentic footage) are well put together, 9 seconds can be enough.

Using video on a landing page can help prospects learn about your brand, persuade them to buy, and entertain them. Including videos on landing pages also has the potential to boost your conversion rates by as much as 80%.

Every website needs some flair to contrast against blocks of text.

Images and videos make the content on a page more exciting and easier on the eyes. They help to retain a visitor’s attention, reinforce the concepts your written content addresses and positively influence your SEO efforts by increasing engagement levels.

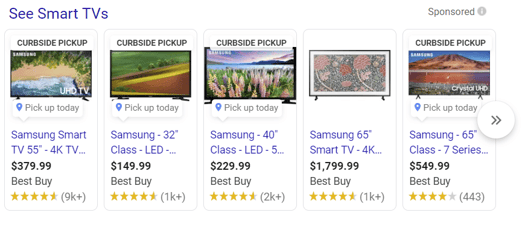

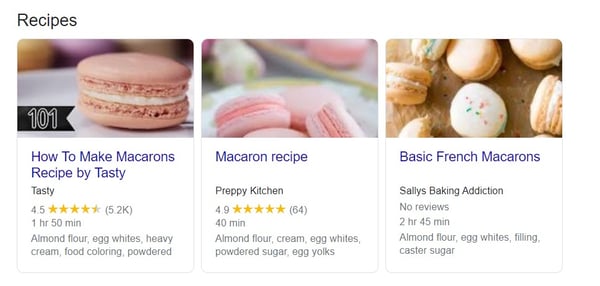

Optimizing the images and videos on a webpage can do much more than make it look good. When someone searches for a keyword or topic relevant to an image or video on your page, it has a chance of appearing in Google’s “Images” or “Videos” results.

While most people may stick to browsing images, others may want to visit the webpage an image comes from. As for videos, viewers who find one helpful or insightful may want to take a look at the website behind it.

The amount of exposure a single image or a video embedded on your webpages receives can vary. However, to build a solid SEO strategy for maximum organic search traffic, there are a couple of things you can do to help make this happen.

This first piece of advice is exclusive to images.

Adding images that are relevant to your content is a no-brainer. Each image needs to be high quality, meaning that its resolution is sharp and the pixels are not visible. You can find an extensive explanation about the image resolution for your pictures to look their best here.

If you have the resources, you can capture most of your images with your own professional equipment. If that’s not within your budget, there are plenty of sources online.

While most pictures you will find on Google Images are subject to copyright, there are sites where you can purchase licensed images. Good options include 500px, Shutterstock, and Adobe Stock.

If that is not an option for you either, some websites provide free, high-quality stock photos. Great resources to check out include PikWizard, Pixabay, and Unsplash.

Captions create a connection between an image and its accompanying copy text.

Captions are commonly placed under an image because they are meant to guide a reader; they help them discern the image’s connection with text. Not only that, but they also help search engine crawlers understand more about the picture.

Most visitors scan a page before deciding whether it is worth reading, and image captions are one of the things they look at. In fact, captions are read 300% more often than the body copy itself.

An image’s file name should be a description of the image itself.

Alt text is an HTML attribute that describes the appearance and function of an image on a page. It helps image SEO because its concise description of the picture makes it easier for crawlers to determine what the image shows.

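Separate the words in an image’s file name with hyphens rather than spaces (for example, customer-support-agent.png). Alt text, on the other hand, should be written as a normal phrase with spaces, since screen readers read it aloud.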

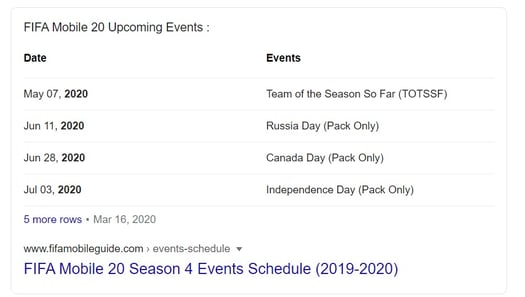

Schema markup is a structured data vocabulary (from schema.org) that search engines use to generate the rich snippets you see when you search for your favorite chocolate chip cookie recipe. The advantage of adding schema markup to your images and videos is that the resulting rich results can appear prominently on the results page, sometimes above the number one ranked standard result.

This feature works best for webpages featuring videos, product images, recipes, movies, or information about a real or fictional person, as well as a business’s name, address, phone number, and rating.
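Schema markup is commonly added to a page as JSON-LD inside a `<script type="application/ld+json">` tag. The sketch below builds a schema.org `VideoObject` with Python's standard `json` module. The property names come from schema.org; the video title, URLs, and date are hypothetical placeholders, and you should check Google's structured data documentation for which properties its video rich results require.

```python
import json


def video_schema(name: str, description: str, thumbnail_url: str,
                 upload_date: str, content_url: str) -> str:
    """Return a JSON-LD <script> block describing a video as a schema.org VideoObject."""
    data = {
        "@context": "https://schema.org",
        "@type": "VideoObject",
        "name": name,
        "description": description,
        "thumbnailUrl": thumbnail_url,
        "uploadDate": upload_date,  # ISO 8601 date, e.g. "2021-03-15"
        "contentUrl": content_url,
    }
    # Escape "</" so text inside the JSON cannot close the script tag early
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Hypothetical video details, for illustration only
print(video_schema(
    name="How to track campaigns in Google Analytics",
    description="A short walkthrough of campaign tracking for B2B marketers.",
    thumbnail_url="https://www.example.com/videos/campaign-tracking.jpg",
    upload_date="2021-03-15",
    content_url="https://www.example.com/videos/campaign-tracking.mp4",
))
```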

If your website produces videos and you need a bigger audience, you should reconsider where you’re uploading them. Consider uploading your videos on video platforms with a broader audience such as YouTube or Vimeo in addition to featuring them on your site. If big companies like HubSpot and Moz upload theirs on other platforms, why shouldn’t you?

Videos with captions consistently have higher engagement rates. They also tend to get about 40% more views, and captions give audiences an additional stimulus that can help drive home the video’s message.

While captions are an excellent addition to your video, you can also opt for sharing a transcript of it. Some people may not be too keen on listening to a video and reading captions at the same time. But, they may want to refer to its transcript at a later time.

Not only that, but video transcripts help to boost your SEO efforts. The reason: for crawlers, it is easier to understand text and index it accordingly. Google crawlers are software, after all!

We all know that a good ol’ catchy title can be all it takes to make us click on a video and watch it. This holds true for casual viewers on YouTube, and it also holds for businesses and individuals looking to learn more about your area of expertise.

Make sure you put together a concise meta description that includes some of the keywords you’re targeting and does an excellent job of summarizing in a sentence the main idea of your video.

When it comes to videos (and YouTube), video thumbnails are everything. We live in a visual world, and if a thumbnail looks fresh, exciting, or can capture the essence of a video’s message, it will receive many views. However, this is mostly true for videos with a more casual tone.

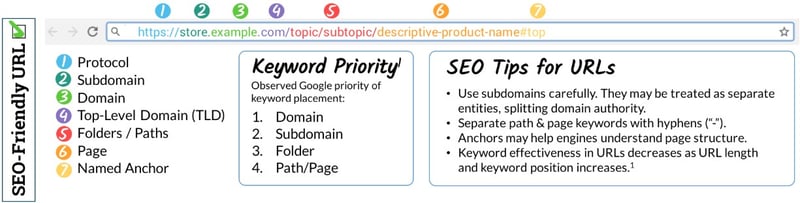

A page’s URL is its web address, that is, where it is found on the Internet. It is where crawlers and users alike can go to see what they need about your business. The URL also tells Internet servers how to retrieve your webpage and show it on a user’s computer or mobile screen.

For this reason, every website that wants more search engine real estate needs to structure its URLs so that Google can access its pages and serve them on SERPs.

The construction of a proper URL can do a lot for your overall SEO strategy.

From the onset, users and search engines get some insight about the content available under the URL.

While a URL itself is not a major ranking factor, the keywords used in it can carry some weight. This works best when the keyword used in a URL is also part of the article’s title.

A well-written URL can serve as its own anchor text, which comes in handy for users in two situations:

(Source: Moz)

URLs that follow this structure are more successful in search engines because they provide the pathway Google crawlers need. Every search engine result contains a webpage URL.

(Source: Moz)

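The protocol is the first part of a URL, usually https:// (HyperText Transfer Protocol Secure) or http:// (HyperText Transfer Protocol), and it tells the browser how to retrieve the page.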

The subdomain is the third level of the domain hierarchy and is found before the root domain and separated from the domain name with a period. For most websites, the subdomain is the “www” part of a web address.

The domain refers to the unique name of a website. For example, in www.theiamarketing.com, the domain name is theiamarketing.

The top-level domain (TLD) refers to the suffix that appears after the domain name. Examples of this include:

The top-level domain is the highest level of the Domain Name System (DNS) hierarchy. DNS is the system that translates a domain name such as “cnn.com” into IP addresses so browsers can load Internet resources.

The folder and page portions of a URL (together, its path) refer to the location of a webpage within a website.

Let’s take a look at two different examples.

A bad example of URL structure:

https://www.theiamarketing.com/guide/blog/232349

A good example of URL structure:

https://www.theiamarketing.com/guide/blog/creating-the-perfect-blogpost-with-hubspot-crm

The first URL does not give users the information they need to predict whether the page will answer their question. The second example, however, shows the pathway the link follows within the site: the article “Creating the perfect blogpost with HubSpot CRM” is found within the blog section, which is located in a section called “guide.” We can decipher the name of the blog post from the slug, the final segment of the URL, where each word is separated by a hyphen. This makes it easier for Google to understand the central topic of the post.

Every webpage on your site needs a purpose. Whether it is to give information, entertain, or sell a service, you want its target audience to see it. For this reason, crawlers must discover it. You can help by including terms that describe the page’s subject. More often than not, this incorporates your targeted keywords.

A correctly structured URL will allow both crawlers and users to see a “breakdown” of where the webpage is located within your site. Let’s use this example:

https://www.theiamarketing.com/guide/blog/creating-the-perfect-blogpost-with-hubspot-crm

Using this URL, we know the website we will be accessing is called Theia Marketing. Its blog is located within the “guide” section, and the blog post, found under “blog,” is likely about creating the perfect blog post using HubSpot’s CRM. Separating words with hyphens (-) makes each keyword easy for site visitors to read.

A well-structured web address allows the user to see a logical flow from domain to category to sub-category to product. The second URL goes from domain to product.

It also gives the user insight into what they’ll find on the page. Coordinating this with your article headline/title will leave no room for doubt about the content’s subject matter.

Keeping URLs relevant and straightforward is the secret to getting both humans and search engines to understand them. Although some URLs include ID numbers and codes, SEO best practices favor words that people can understand.
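Here is a minimal Python sketch of turning a post title into a readable, lowercase, hyphen-separated slug like the one in the Theia Marketing example. The `slugify` and `build_url` helpers and their rules (lowercase everything, replace anything that is not a letter or digit with a hyphen) are illustrative assumptions, not the behavior of any specific CMS; note that this simple version drops accented and other non-ASCII characters.

```python
import re


def slugify(title: str) -> str:
    """Convert a page title into a lowercase, hyphen-separated URL slug."""
    # Lowercase first to avoid case-variant duplicate URLs
    text = title.lower()
    # Replace every run of characters that are not a-z or 0-9 with a single hyphen
    text = re.sub(r"[^a-z0-9]+", "-", text)
    # Trim hyphens left over at the start or end
    return text.strip("-")


def build_url(domain: str, *folders: str, title: str) -> str:
    """Join a domain, folder path, and title slug into a full URL."""
    path = "/".join(folders + (slugify(title),))
    return f"https://{domain}/{path}"


print(build_url("www.theiamarketing.com", "guide", "blog",
                title="Creating the Perfect Blogpost with HubSpot CRM"))
# https://www.theiamarketing.com/guide/blog/creating-the-perfect-blogpost-with-hubspot-crm
```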

A word of advice: avoid using uppercase letters in your URLs, because they can cause duplicate page issues. For example, www.theiamarketing.com/guide/blog and www.theiamarketing.com/guide/Blog can be treated as two separate pages.

Duplicate pages are something you want to avoid. When faced with two or more pages with similar content, Google’s bots have to choose which one to rank over the other. A situation like this can work against your best SEO efforts and may lead to lower rankings for all of the pages involved.
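To catch the uppercase problem, you could group a list of your site's URLs by their lowercased form and flag any group that contains more than one spelling. This is a minimal sketch: where the URL list comes from (for example, a sitemap export or a crawl) is an assumption, and the sample URLs reuse the Theia Marketing example.

```python
from collections import defaultdict


def find_case_duplicates(urls: list[str]) -> list[list[str]]:
    """Group URLs that differ only by letter case."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url in urls:
        # URLs that match after lowercasing may still be treated as separate pages
        groups[url.lower()].append(url)
    return [variants for variants in groups.values() if len(variants) > 1]


urls = [
    "https://www.theiamarketing.com/guide/blog",
    "https://www.theiamarketing.com/guide/Blog",
    "https://www.theiamarketing.com/guide",
]
for variants in find_case_duplicates(urls):
    print("Possible duplicates:", variants)
```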

Just as essential as building webpages that look good to users and search engines alike is improving the “behind the scenes” aspects of SEO. These include all of the elements that play an active role in making your pages worthy of high SERP rankings and sustainable website traffic.

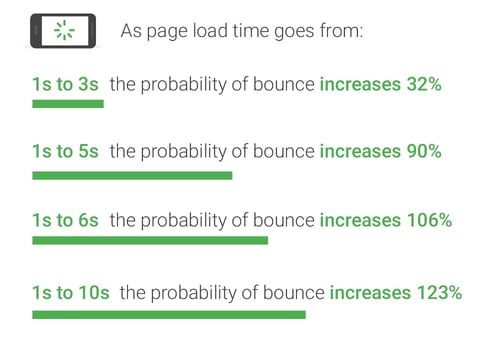

User experience (UX) refers to how people experience your website, which is shaped largely by its design. It includes a person’s subjective feelings about your site, such as how easy to use and engaging it is. Good user experience is vital for your site’s readers and directly affects your site’s overall traffic and engagement rates.

Think of your website as the backbone of your marketing strategy: 86.6% of small and medium-sized businesses in the U.S. said their website is their most important digital marketing asset.

So, creating a good user experience on your website is essential to all forms of marketing, but it is especially important when using contextual marketing.

Considering that today’s digital marketing is all about the customer, everything about your website needs to be tailored to your target audience.

Your goal shouldn’t only be to rank higher on SERPs and get users to visit your website. Your ultimate objective should be to keep them on your site as long as possible, help them get familiar with your brand, and turn them into paying customers.

Questions every SEO professional should ask when deciding how to improve their site’s UX include:

Without a concrete answer to these questions, creating a UX optimization strategy that fits your current needs can be a challenge.

Responsive web design is a web design strategy that allows websites and web pages to display on all devices and screen sizes automatically.

It uses CSS rules (typically media queries) to adjust style properties according to the screen size, the device’s orientation (such as tilting your smartphone or tablet), and the screen resolution.

Why do you need a responsive web design? The obvious answer is that it makes it easier for users to navigate on your website from any device. It also means your site is mobile-friendly, so visitors will want to spend more time on it, and search engines are inclined to consider it a quality website.

Responsive design spares users the annoying task of resizing or zooming in on a site because it displays awkwardly on their screen. Websites that require these adjustments tend to have high bounce rates and rarely rank well on SERPs.

So how can you tell whether your site, or any other site, is responsive? There are two ways to go about this. The first is to visit the same webpage on all your devices and check whether the images, resolution, and size of all the website’s elements adjust to each screen.

The other method is to do the following:

To build a website for optimal UX power, you have to know what you’re aiming to accomplish. This includes the following:

We have included sections throughout this page that exclusively focus on each of these aspects. All of these elements, when adequately enhanced, work together to provide the best UX for your site visitors.

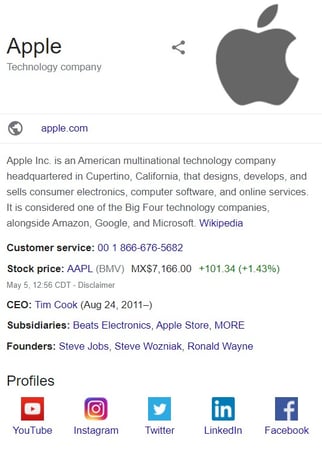

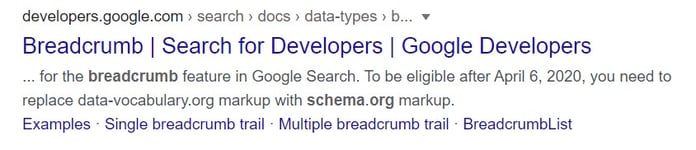

Meta tags are pieces of text used to describe a page’s content. The “meta” stands for “metadata,” the kind of data these tags provide: data about the data on your page.

They are essential for SEO purposes because these short content descriptions help search engine crawlers determine the topic of a webpage. This data is used to display snippets of a webpage on search results and for ranking purposes.

Examples of common meta tags include:

<title> and <meta name="description">

Part of using meta tag optimization is to give your content a “leg up” using your page’s HTML code. Because Google bots sift through thousands of webpages at a time, you need to specify which information you want them to crawl and index. They’ll consider this data when ranking all available content that’s relevant to your targeted keywords.

While their purpose is an important one, they are not visible on the webpage itself, only in a page’s source code. To access a page’s source code, right-click on any part of the page and click “View page source.”

The difference between tags you can see (like the tags on a blog post) and meta tags is where they are placed. Meta tags, which provide metadata, sit in a webpage’s HTML code and are meant for search engines and web crawlers rather than for readers.

There are more than 20 meta tags you can use to enhance your website; however, to build a solid foundation in meta tags, you only need to know about a few of them. If you’re interested in learning about all the different kinds, you can read here.

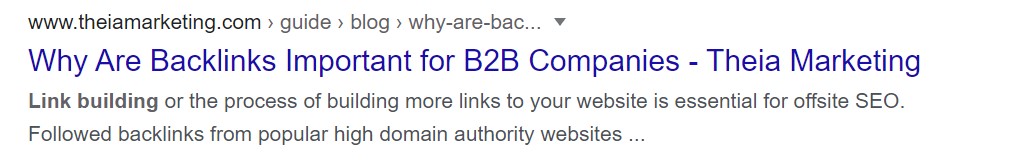

The meta title tag, <title>, is located in the page’s <head> section and is the most important meta tag of all. Every webpage on your site should have a unique title tag that describes the page. It tells Google crawlers and users the title of the page, such as the title of a blog post.

The end goal of a title tag, like that of a meta description, is to convince the user to click on the link. While metadata in general describes a webpage’s content, the title tag is what appears as the clickable headline of a search result.

The meta title is useful when shuffling through multiple open tabs on your web browser; it helps you to identify which tab corresponds to the content on a web page.

The meta description tag, <meta name="description">, summarizes the content on a webpage so that search engines have a summary to show in results and humans can make an educated decision before clicking on it.

While you should put some time into writing a brief one- to two-sentence description of the content, keep in mind that Google will sometimes generate its own snippet from your page’s text if it considers that more relevant to the search query.
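Both the title tag and the meta description live in a page's `<head>`. The minimal Python sketch below renders them with the standard library's `html.escape`, so characters like quotes and ampersands don't break the markup; the `head_meta` helper and the sample title and description are hypothetical.

```python
from html import escape


def head_meta(title: str, description: str) -> str:
    """Render a <title> element and a meta description tag for a page's <head>."""
    return (
        f"<title>{escape(title)}</title>\n"
        # quote=True (the default) also escapes double quotes inside the attribute
        f'<meta name="description" content="{escape(description, quote=True)}">'
    )


print(head_meta(
    title="SEO for Beginners: A B2B Guide",
    description="Learn the SEO basics B2B marketers need: keywords, content, "
                "page structure & technical SEO.",
))
```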

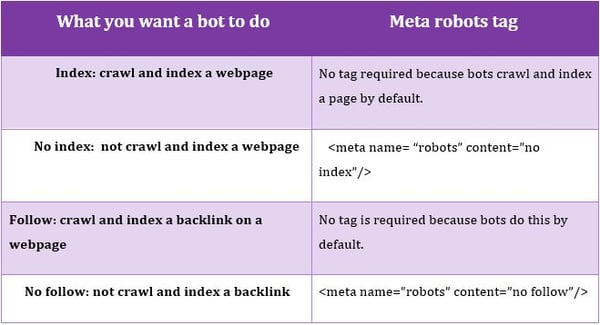

The meta robots tag, <meta name="robots">, tells search engines whether and how they should index your webpages and follow the links on them.

By default, search engines crawl and index a webpage, so a meta robots tag is not necessary. However, if you do not want a page indexed, or do not want its links followed, use this tag.

Let’s look at a breakdown of this:

Key reminder: Use meta robots tags only when you want to limit how Google indexes a page or follows its links.
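The two most common directives are `noindex` (keep this page out of search results) and `nofollow` (don't follow the links on this page). The minimal sketch below shows how they combine into a single tag; the `robots_meta` helper is hypothetical. Keep in mind that a search engine has to be able to crawl the page to see the tag at all.

```python
from typing import Optional


def robots_meta(index: bool = True, follow: bool = True) -> Optional[str]:
    """Return a meta robots tag, or None when the default (index, follow) applies."""
    if index and follow:
        # Search engines index pages and follow links by default, so no tag is needed
        return None
    directives = [
        "index" if index else "noindex",
        "follow" if follow else "nofollow",
    ]
    content = ", ".join(directives)
    return f'<meta name="robots" content="{content}">'


# Keep a page out of search results but still let bots follow its links
print(robots_meta(index=False))
# <meta name="robots" content="noindex, follow">
```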

Alt text, short for alternative text, is an HTML attribute that describes the appearance and function of an image on a webpage.

Images play a pivotal role in the quality and engagement of a webpage’s content, and there are several reasons to give them alt text when building SEO-friendly webpages.

This is especially true when a site is slow to load. If an image does not load, the alt text gives users some context about what the image shows.

Google’s crawlers rely heavily on text-based content and cannot always determine an image’s subject matter on their own. An explicit and concise description of the pictures and graphics on your page helps these bots do their job.

Have you noticed that Google Image Search (a Google search feature that retrieves images for a search query) displays images from hundreds of different sites that are relevant to the search you just performed? Google Images uses alt text, among other signals, from the websites its bots have indexed to retrieve the most relevant images for a search.

Alt text is written into the HTML code of a webpage as the alt attribute of an image element. This is what the alt attribute looks like in the source code of a webpage:

(Source: HubSpot)
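In HTML, alt text is the `alt` attribute of an `img` element. The minimal Python sketch below renders such a tag with escaped attribute values, pairing a hyphenated, descriptive file name with alt text written as a normal phrase. The `img_tag` helper and the file path are hypothetical; the alt text is the good example discussed in this section.

```python
from html import escape


def img_tag(src: str, alt: str) -> str:
    """Render an <img> element with escaped src and alt attributes."""
    return f'<img src="{escape(src)}" alt="{escape(alt)}">'


# Hyphenated, descriptive file name; alt text written as a plain phrase
print(img_tag(
    src="/images/customer-support-agent-headset-laptop.png",
    alt="Illustration of a customer support agent with headset and laptop",
))
# <img src="/images/customer-support-agent-headset-laptop.png" alt="Illustration of a customer support agent with headset and laptop">
```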

Alt text should be specific and descriptive of an image and should also consider its context.

Let’s use the following image to compare a bad and good alt text description.

Bad example: “Illustration of a woman with headset”.

This alt text description conveys what a user sees, but it could be more specific. You want Google’s bots to associate the image with the relevant section or sections of your article.

Good example: “Illustration of a customer support agent with headset and laptop”.

The alt text description above explains the context of the image in more detail.

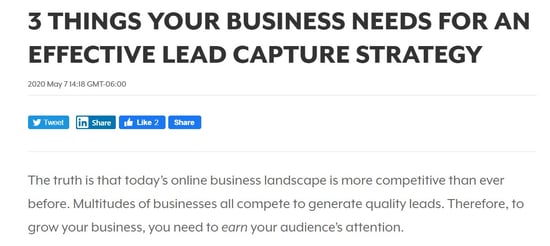

Many of today’s top businesses have grown and extended their customer base by using social media. If you want greater reach, make it easy for anyone who reads your content online to share it with others on social networks.

Here are two things you can do to make this happen.

While it is tempting to add social sharing buttons for every major social network, resist the urge. You do not want to give your audience too many options and risk your content not gaining momentum on any specific network.

Most marketers opt to include social sharing buttons only for the networks they know their customer base frequents.

For example, if you write for an engineering firm, your target audience is likely to be present on social platforms like LinkedIn, Facebook, or Twitter. Strategically placing sharing buttons for those networks on your webpage will likely work better than focusing on a platform like Instagram.

2. Create short and descriptive URLs. The goal is to create a URL that makes it easy for anyone to know what the webpage is about.

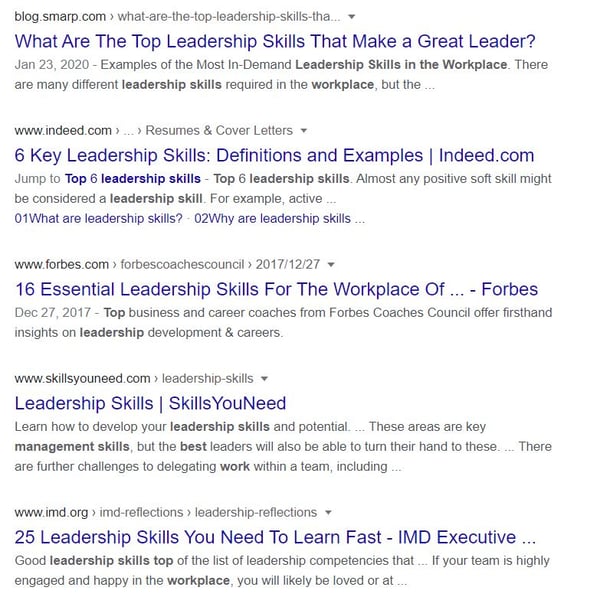

Domain authority is a metric developed by the SEO software company, Moz. Businesses should be paying attention to their site’s domain authority because it plays a vital role in the overall SEO success of any website.

Domain authority is not a Google ranking factor and will not affect a site’s ranking position. However, it predicts how likely a website is to rank on SERPs based on how well it has been optimized for SEO and the number of backlinks it has.

It is measured on a scale from 0 to 100, where 100 indicates the greatest likelihood of ranking on the first page of search results.

Generally speaking, a website can raise its authority on the web by:

Multiple factors influence which sites are considered authoritative in their industry. Ranking among the first ten spots of a Google search is one of them.

How do search engines determine the websites with authority? While the term “domain authority” does not exist for Google, part of creating quality content is having this content be authoritative. It also includes sites where:

The number and quality of backlinks your webpages earn are among Google’s strongest indications of authority, and they directly affect a page’s ranking.

Indeed, Google wants to give users search results that are authoritative and relevant to what they are looking for. Therefore, your content should match specific search queries particularly well.

While a Moz domain authority score will not affect your SERP ranking, it gives you insight into how likely you are to rank well at that point in time. If your score is lacking and you are not ranking as well as you’d like on Google, you can do the following:

Establishing your website’s authority may take time, but it is the key to succeeding at SEO and reaping its many benefits.

Let’s look at an example of how an organization can start building content authority.

A business named 3D Designs is known for the printed prosthetics it delivers to children who need them. Prosthetics are only one of many things the company 3D prints. It wants to establish its authority on 3D printing and increase its search engine rankings. It can achieve these goals by creating as much content on 3D printing as it can, comparing its content with its direct competitors’ content, and targeting a set of keywords in every piece of written content. It can also ask its clients to write about it and link to its site.

When search engine bots proceed to crawl and index their website, there will be no room for doubt that 3D Designs specializes in 3D printing, in prosthetic 3D printing, and is considered an authority in those topics by others.

Much like domain authority (a search engine ranking score that predicts how well a website will rank on search engine results pages), page authority is a score created by the SEO software company Moz.

Unlike domain authority, page authority predicts how well a specific webpage could rank on search engine results pages. Its score also ranges from 0 to 100, with 100 being the most likely to rank and 0 the least likely.

The lower your initial page authority score is (say, 20-30), the easier it can be to raise your score. However, pages with higher scores (70-80) may find it more challenging to improve due to the higher quality of competitor websites.

Domain and page authorities are based on information from Moz’s web index and are measured using machine learning that compares a website’s or web page's performance against thousands of SERPs. Moz does not consider factors like keyword use or content optimization when calculating page authority, but it does evaluate the number of links that point to a specific site or webpage.

Much like with domain authority, the best thing an SEO professional can do is to increase the number of quality links that point to their webpages.

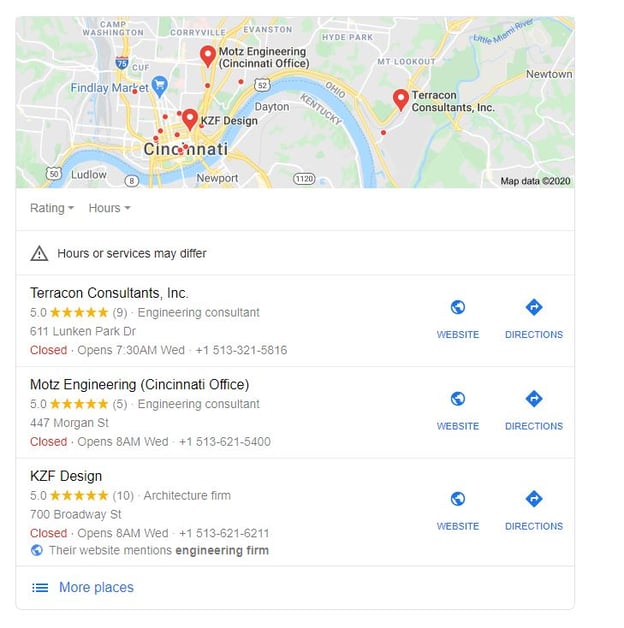

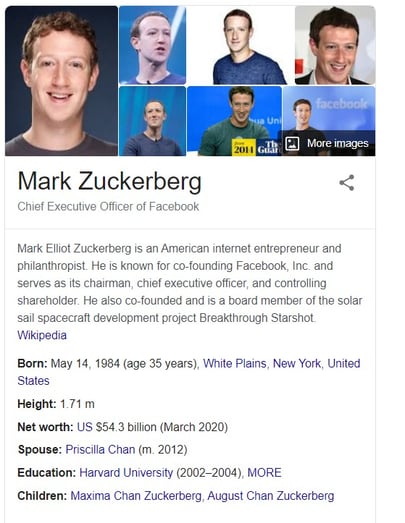

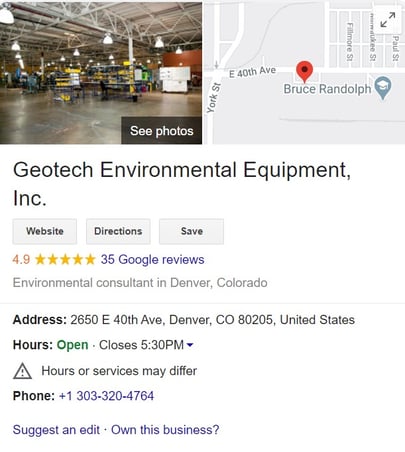

Local SEO, a subcategory of search engine optimization (SEO), is a strategy that helps local businesses show up in local search results. Considering that 46% of all Google searches are looking for local business information, every company with a physical location would do well to invest in local SEO.

It also consists of optimizing a business’s online presence to attract more traffic from local search results.

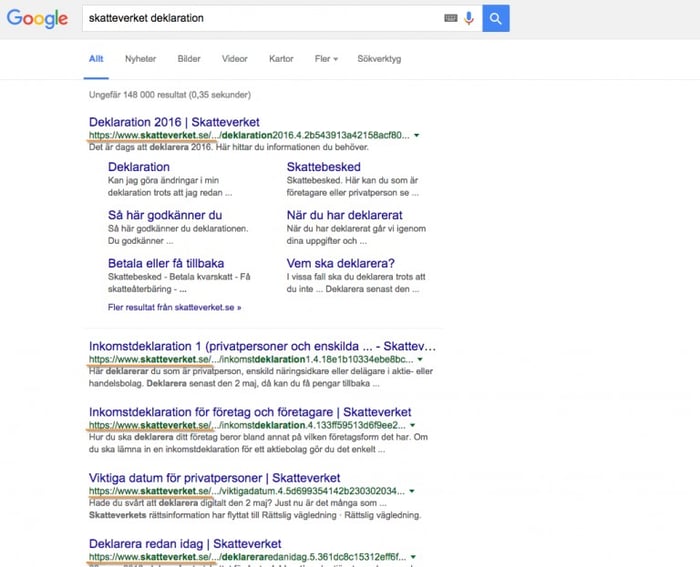

When performing a Google search for “bakery near me,” you will get two types of results: organic search results and “snack pack” results.

Organic results are the listings we aim to rank high in by implementing SEO strategies.

The “snack pack” results, also called the Google 3-pack, consist of the top three business listings near a user’s current location that match their search. If you want your local business to land in this coveted 3-pack, this is what you need to optimize.

Since an estimated 92% of users choose to buy from a business listed in the first three spots of local search results, you want your business featured there.

Mobile use is closely tied to local search, because users looking for local information usually search from a mobile device.

As mobile search grows more important to Google’s local rankings, optimizing your business’s online presence becomes just as important for strong Local SEO results.

There are multiple things a local business can do to increase its chances of ranking in the top three local results ahead of its competitors.

Start with keyword research. If you run an engineering firm, for example, this means brainstorming all of the search queries someone could type into a search engine when looking for an engineering firm.

If you have a hard time brainstorming the different keyword combinations your customers could use, Moz Keyword Explorer and Ahrefs’ Keywords Explorer are good options. Both are also great allies for analyzing the keywords your direct competitors rank for.

Google’s autocomplete feature can also provide more keyword ideas: start typing a query into the search bar and note the suggestions that appear.
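To speed up brainstorming, you can combine a few seed terms into every possible query and then check the resulting list in the keyword tools mentioned above. The sketch below assumes three hypothetical lists (services, modifiers, and locations, including the placeholder city “Austin”); an empty string means “leave this part out.” It only generates candidates and says nothing about search volume.

```python
from itertools import product


def keyword_combinations(services, modifiers, locations):
    """Build candidate search queries from every service/modifier/location combination."""
    queries = []
    for service, modifier, location in product(services, modifiers, locations):
        # Skip empty parts so "" means "no modifier" or "no location".
        parts = [part for part in (modifier, service, location) if part]
        queries.append(" ".join(parts))
    return queries


# Hypothetical seed terms for an engineering firm.
services = ["engineering firm", "structural engineer"]
modifiers = ["", "commercial", "licensed"]
locations = ["", "near me", "in Austin"]

for query in keyword_combinations(services, modifiers, locations):
    print(query)
```

Keeping the lists short matters: the number of queries is the product of the list lengths (here 2 × 3 × 3 = 18), so it grows quickly.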

By claiming and completing your Google My Business profile, you make sure Google has the most up-to-date information about your business. It is also pivotal for driving more local traffic, because searchers will now have your website and social media profiles available to contact you.

In addition to updating your business information on your Google My Business account, keeping it active will ensure local search results include your business. Keeping your account active includes:

Claiming your business citations on every major directory listing is smart. Making sure each profile shows your latest NAP (name, address, and phone number), the essential business information that should be publicly available online, is even smarter.

Accurate citations on these listings are a top ranking factor for Local SEO, because a consistent NAP helps Google’s crawlers verify that a business exists and corroborates the information Google has gathered about it from across the Internet.
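A quick way to audit NAP consistency is to normalize each listing and flag the ones that differ from the most common version. This is a minimal sketch assuming you have already copied each directory’s name, address, and phone number into a dictionary by hand; the directory names and business details are hypothetical. The normalization is deliberately simple: it ignores case, punctuation, and phone formatting, but it will not treat “St” and “Street” as equal.

```python
import re
from collections import Counter


def normalize_text(text: str) -> str:
    # Lowercase, strip punctuation, and collapse repeated whitespace.
    text = re.sub(r"[^\w\s]", "", text.lower())
    return " ".join(text.split())


def normalize_phone(phone: str) -> str:
    # Keep digits only, so "(512) 555-0100" and "512.555.0100" compare equal.
    return re.sub(r"\D", "", phone)


def normalize_nap(listing: dict) -> tuple:
    return (
        normalize_text(listing["name"]),
        normalize_text(listing["address"]),
        normalize_phone(listing["phone"]),
    )


def inconsistent_listings(listings: dict) -> list:
    """Return the directories whose NAP differs from the most common version."""
    normalized = {site: normalize_nap(nap) for site, nap in listings.items()}
    majority, _ = Counter(normalized.values()).most_common(1)[0]
    return sorted(site for site, nap in normalized.items() if nap != majority)


# Hypothetical listings for an example business.
listings = {
    "google": {"name": "3D Designs", "address": "123 Main St., Suite 4", "phone": "(512) 555-0100"},
    "yelp": {"name": "3D Designs", "address": "123 Main St Suite 4", "phone": "512-555-0199"},
    "bing": {"name": "3D designs", "address": "123 Main St. Suite 4", "phone": "512.555.0100"},
}
print(inconsistent_listings(listings))  # ['yelp']
```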

On top of this, consistent listing information helps potential customers find your business, which can help drive sales and revenue.

Remember that link building is one of the most significant factors influencing a website’s rank on SERPs. This rule also applies to Local SEO.

For a local business, earning links from across the web that point back to your site can strengthen your Local SEO. A local business will typically fall into one of three situations:

No links: For small, hyper-local businesses, keeping your Google My Business profile up to date, maintaining business citations, and optimizing your pages may be enough.

Location-specific links: Credible links from sources in your area, such as local news outlets, non-profits, or a fundraiser you have organized.

Industry-specific links: Links from sites relevant to your industry, including guest blog posts, are good options.

Staying active on social media lets local businesses stay in contact with potential customers. Social media platforms have the added bonus of letting customers learn about the organization’s special offers, events, and news.

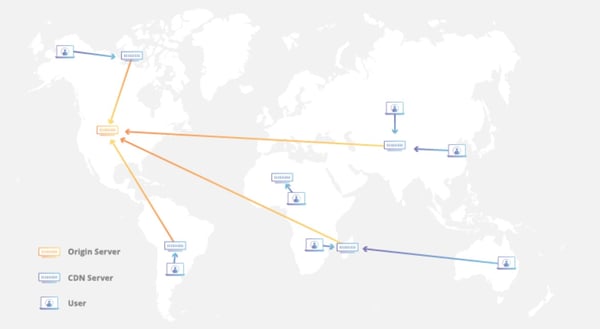

While on-page SEO covers all of the elements that go into building an SEO-friendly website that users enjoy navigating, off-page SEO focuses on the efforts a business makes to build backlinks and establish its authority through other sources.

Off-page SEO works to strengthen the relationships your website builds with other sites across the web. It includes strategies that help search engines view your website as a reliable, trusted source worthy of ranking on the first SERP.

Throughout this section, you will notice that most of the content relates to building a large volume of high-quality links.

Per Moz, link building is the process of acquiring hyperlinks from other websites to your own.

As we know, a hyperlink is a link identified by its URL, the web address it points to. Links are how users get from one webpage to the next.

The key to a successful SEO strategy is striking a delicate balance between three things:

When a Google crawler scans a webpage and finds a link, it will “crawl” that link and scan the linked page as well. This means crawlers follow the links on your website as well as the links on the other websites you link to. The more sites link to a page, the more often crawlers reach it, and the more likely search engines are to consider that page authoritative and relevant for its subject matter.
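The crawling behavior described above can be sketched as a breadth-first walk over a link graph. In this minimal example the “web” is a hypothetical dictionary of pages and the links on each; it scans every page once, but counts every link it sees, so pages that many others point to accumulate more inbound links. Real crawlers fetch live pages, respect robots.txt, and feed far richer signals into ranking; this only illustrates the follow-the-links idea.

```python
from collections import Counter, deque

# Hypothetical link graph: each page maps to the pages it links to.
LINKS = {
    "a.example/home": ["a.example/blog", "b.example/guide"],
    "a.example/blog": ["b.example/guide"],
    "b.example/guide": ["c.example/tools"],
    "c.example/tools": ["b.example/guide"],
}


def crawl(start, links):
    """Breadth-first crawl from `start`, following each discovered link.

    Returns the pages in the order they were scanned and a Counter of how
    many links point to each page among the crawled pages.
    """
    seen = {start}
    order = []
    inbound = Counter()
    queue = deque([start])
    while queue:
        page = queue.popleft()
        order.append(page)
        for target in links.get(page, []):
            inbound[target] += 1       # count every link, even to pages already seen
            if target not in seen:     # but scan each page only once
                seen.add(target)
                queue.append(target)
    return order, inbound


order, inbound = crawl("a.example/home", LINKS)
print(order)
print(inbound.most_common(1))  # [('b.example/guide', 3)]
```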

However, great content alone is not enough to rank high on search engines. Google also cares how interesting and helpful other people find your content, and in SEO, that shows up as the number of links pointing to your website.

Link building was not always as it is today, so how did it get to be this way?

When Google was first introduced, popular search engines of the time, such as Yahoo and AltaVista, ranked their search results based on the content of a page. Links from other sites counted, but it did not matter whether a linking website was “high-quality” or “low-quality”; they all carried the same weight.

Google became a major player because it evaluated links differently. Besides scanning the content on webpages, it looked at how many other pages linked to them. This was the beginning of the PageRank algorithm, which treated each link as a “vote of confidence” from one page to another and gave more weight to votes from pages that were themselves well linked.
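To make the “vote of confidence” idea concrete, here is a minimal sketch of the textbook PageRank calculation (power iteration with the commonly cited damping factor of 0.85). It is not Google’s current ranking system, which uses many more signals. The page names are hypothetical: `target_a` and `target_b` each receive exactly one link, but `target_a`’s link comes from a page that is itself well linked.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank by power iteration.

    `links` maps each page to the list of pages it links to. Each page splits
    its current score evenly among the pages it links to, so a vote from a
    high-scoring page is worth more than a vote from an obscure one.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # Page with no outgoing links: spread its score over all pages.
                for p in pages:
                    new_rank[p] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical mini-web.
web = {
    "popular": ["target_a"],
    "fan1": ["popular"],
    "fan2": ["popular"],
    "fan3": ["popular"],
    "obscure": ["target_b"],
}
ranks = pagerank(web)
for page, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(f"{page:10s} {score:.3f}")
```

Both targets have one inbound link, yet `target_a` scores higher, which is the key difference from counting every link equally.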

Once websites caught on, many started linking to one another to collect links. Some treated linking as a way to repay a favor, and others sold links to any domain willing to pay for them.

With its Penguin update in 2012, Google cracked down on manipulative link building and required sites to focus on the quality of their links, not just the number.

Link building is all about links. We previously looked at a breakdown of the elements that comprise a URL.

In this section, we will classify the different parts of a link. When a link is placed in the HTML code of a webpage, it will look like this:

(Source: Moz)

Let’s look at each element individually.

The `<a` at the start of a link opens the anchor tag (the “a” stands for “anchor”). It begins the link tag and tells search engines that a link will follow.

The `</a>` part of the tag closes the link tag and lets search engines know the link ends there.

Href is short for hypertext reference, the HTML attribute that specifies where a link points. The URL within the quotation marks is the address the reader is taken to after clicking the hyperlink. While this URL usually points to a webpage, it can also point to an image, a PDF, or a file to download.

Anchor text is the short, visible text of a clickable link on a page. It is usually blue and underlined.
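To see how the parts of a link fit together, the sketch below uses Python’s standard `html.parser` module to pull the `href` value and the anchor text out of each `<a>` tag, roughly the way a crawler reads links. The HTML snippet and URL are hypothetical examples, and the parser is intentionally minimal (for instance, it ignores `<a>` tags that have no `href`).

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect (href, anchor text) pairs from <a> tags in an HTML snippet."""

    def __init__(self):
        super().__init__()
        self.links = []    # finished (href, anchor_text) pairs
        self._href = None  # href of the <a> tag we are currently inside
        self._text = []    # text fragments seen inside that tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":  # "<a" opens the link tag
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:  # text between <a ...> and </a> is the anchor text
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:  # "</a>" closes the link tag
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


html = '<p>Read <a href="https://www.example.com/3d-printing-guide">our 3D printing guide</a> today.</p>'
parser = LinkExtractor()
parser.feed(html)
print(parser.links)  # [('https://www.example.com/3d-printing-guide', 'our 3D printing guide')]
```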

Using what we already know about how crawlers move from one page to the next, we can conclude that search engines do two things with the links they read on a webpage:

Crawlers then index the information they gathered from these webpages. Every search query is answered with the most relevant results from Google’s index, not from the live Internet.
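The point that queries are answered from an index rather than the live web can be illustrated with a toy inverted index: each word maps to the set of pages containing it, and a search only looks up that map. The pages below are hypothetical, and this sketch only retrieves matching pages; it does no ranking, which is where signals such as links come in.

```python
import re
from collections import defaultdict


def build_index(pages):
    """Map each word to the set of page URLs that contain it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            index[word].add(url)
    return index


def search(index, query):
    """Return URLs containing every query word, using only the index."""
    words = re.findall(r"[a-z0-9]+", query.lower())
    if not words:
        return []
    matches = set.intersection(*(index.get(w, set()) for w in words))
    return sorted(matches)


# Hypothetical crawled pages.
pages = {
    "example.com/prosthetics": "3D printed prosthetics for children",
    "example.com/printers": "How to choose a 3D printer",
    "example.com/bakery": "Fresh bread from a local bakery",
}
index = build_index(pages)
print(search(index, "3D printed"))  # ['example.com/prosthetics']
```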

When it comes to link building, Google will ask the following questions to determine how to rank a webpage:

The terms link building and backlinks are often used when referring to the efforts a website makes to have other websites link back to it.

To avoid confusion, it is essential to establish the difference between the two terms.

Link building is a process; it works to acquire hyperlinks from other websites to your own. A backlink can be one of two things:

A hyperlink (commonly known as a link) provides a way for users to navigate between webpages within the same site or different sites. Search engines use links to crawl the Internet; they will crawl the hyperlinks between each page on your website, and they will crawl the links between entire websites.

We know that link building is a process in which Website 1 works to get Websites 2, 3, and 4 to link back to it.

There are many things that sites can do to have other websites link to them.

In this post-Penguin age, every effort to get more links to your site should aim at creating natural links: backlinks that happen organically, when other sites link to your content because they consider it credible, useful, and valuable to their readers.

This is where link building becomes link earning. When done right, link building and link earning are the same thing.

Before the Penguin algorithm update, Google struggled with websites that traded and sold links. Many of these links pointed to low-quality pages that provided no value to readers, yet those pages were still rewarded with high rankings because these practices were “technically allowed.”

After Penguin, Google began prioritizing high-quality websites that earn links from other quality sites.

The premise of link building is creating content that other sites in your industry want to link to in order to 1) provide value to their readers and 2) cite a valid resource that backs up their claims.

How does Google know which sites fulfill these requirements? It uses a site’s link profile to determine this.

A link profile is an overview of all the inbound links your website has earned. It measures:

One thing a link profile reveals is the diversity of the links that endorse your website. It lets Google know whether a wide variety of sites link back to the same URL or whether just a handful of domains link back hundreds of times. The latter could raise suspicions of spammy linking.
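One rough way to quantify that diversity is to divide the number of unique linking domains by the total number of backlinks: 1.0 means every link comes from a different domain, and a low value means a few domains supply most of your links. This is an illustrative metric, not one Google publishes. The sketch assumes you have exported your backlinks as a list of source URLs (for example, from a link tool); the URLs are hypothetical, and it groups by hostname, so `blog.example.com` and `www.example.com` count as different domains.

```python
from collections import Counter
from urllib.parse import urlparse


def linking_domains(backlinks):
    """Count how many backlinks come from each linking hostname."""
    return Counter(urlparse(url).hostname for url in backlinks)


def diversity_ratio(backlinks):
    """Unique linking hostnames divided by total backlinks (1.0 = all different)."""
    if not backlinks:
        return 0.0
    return len(linking_domains(backlinks)) / len(backlinks)


# Hypothetical backlink exports.
diverse = [
    "https://news.example.org/story",
    "https://blog.example.net/post",
    "https://shop.example.com/page",
    "https://uni.example.edu/research",
]
concentrated = [
    "https://spam.example.biz/p1",
    "https://spam.example.biz/p2",
    "https://spam.example.biz/p3",
    "https://news.example.org/story",
]
print(diversity_ratio(diverse), diversity_ratio(concentrated))  # 1.0 0.5
```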

You will want a diverse set of websites linking back to you. Why? Because links from the same small group of sites have less and less impact on the quality of your link profile over time. This is why it is crucial to build good relationships with other websites in your industry.

Google rewards webpages that link to trusted sources and earn links from other authoritative pages.

When a website mentions a business or organization in its content, it will usually link to a specific page on the organization’s website that addresses the same topic. Sometimes, however, a writer will link to the organization’s homepage instead.

While this small detail seems harmless, it is worth avoiding because it makes it harder for individual webpages to attain high rankings. A single page cannot generate link equity on its own; it depends on external and internal links to boost its SERP rank.

To help with this issue, use internal links to highlight any articles or blog posts that are not getting enough web traffic.

Backlinks are not created equal – those that come from high-quality websites tend to influence a webpage’s rank position more than those from low-quality sites.

Deep-link ratio is the percentage of your backlinks that point to pages other than your homepage. It is another factor that affects backlink value. Google prefers links to inner pages rather than to a homepage, because a link is a kind of endorsement that a particular page is valuable: links to an inner page endorse that page, while links to a homepage endorse the website as a whole.
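The deep-link ratio defined above is simple arithmetic: (backlinks pointing to non-homepage URLs ÷ total backlinks) × 100. The sketch below assumes you have a list of the URLs on your own site that your backlinks point to; the URLs are hypothetical, and it treats a URL as the homepage only when its path is empty or `/` (so `/index.html` would count as a deep link).

```python
from urllib.parse import urlparse


def is_homepage(url):
    """Treat a URL as the homepage if its path is empty or "/"."""
    return urlparse(url).path in ("", "/")


def deep_link_ratio(backlink_targets):
    """Percentage of backlinks that point to pages other than the homepage."""
    if not backlink_targets:
        return 0.0
    deep = sum(1 for url in backlink_targets if not is_homepage(url))
    return 100.0 * deep / len(backlink_targets)


# Hypothetical backlink targets on your own site.
targets = [
    "https://www.example.com/",
    "https://www.example.com",
    "https://www.example.com/blog/3d-printed-prosthetics",
    "https://www.example.com/services/prototyping",
]
print(deep_link_ratio(targets))  # 50.0
```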

Curious to learn which sites use inbound links to refer back to yours? Use Moz’s Link Explorer to find out and start building a healthy link profile.

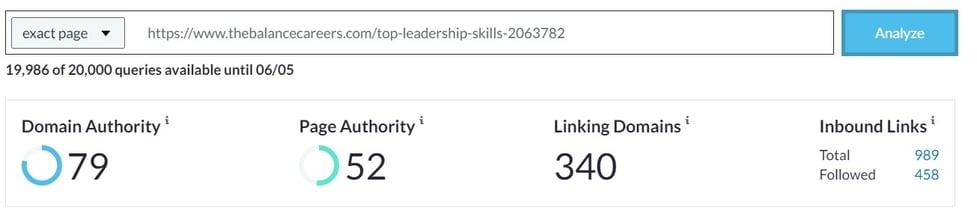

Now that we know the importance of linking on a webpage and the positive effect of high-quality links on a page’s SEO ranking, the question to ask is: How many links do you need to rank on page one?

The reality is that there is no set number.

However, there is a way to find out how many links a page needs to be competitive: aim for roughly the same number of links as the pages featured on the first SERP. Matching their link counts gives you a solid baseline for how many links you will need to rank at the top as well.

So how can you go about this?

1. Choose the topics you want to rank for.

These topics should be broad enough to have many long-tail variations that users search for, such as playground manufacturing.

2. Determine the average number of linking domains that point to these webpages.

Open each of the first-page results and run it through a link-checking tool. Moz Link Explorer, for example, will tell you how many linking domains point to a URL. Once you know the average number of domains linking to those pages, you will have an idea of how many links you need to compete with the results on the first page of a SERP.

In other words, if the webpages on the first page of results have an average of X linking domains, your content will need to earn roughly X links to compete with them.

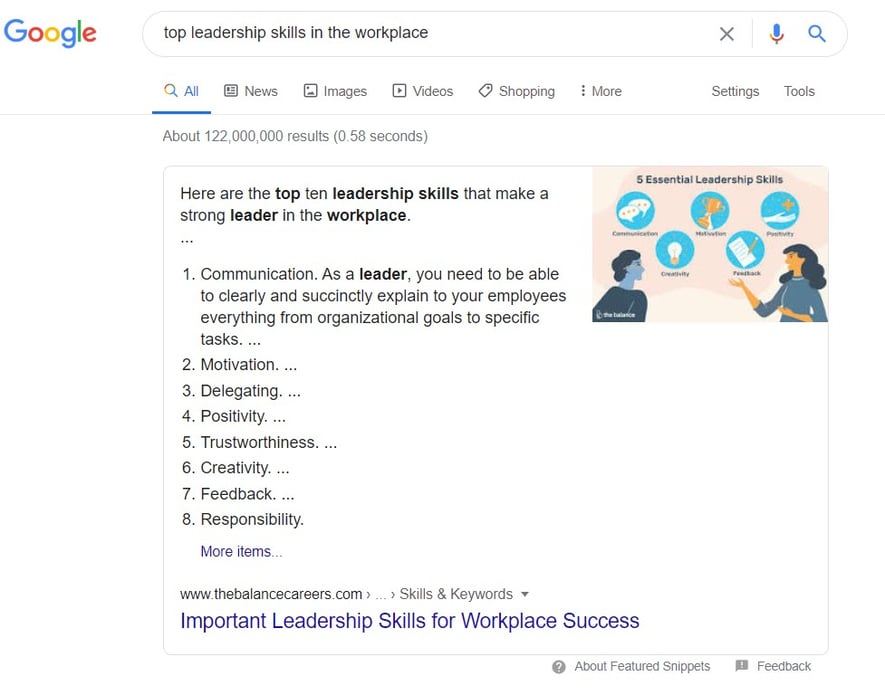

In this example, to rank within the top 10 search results for the keywords “top leadership skills in the workplace,” you would have to earn an average of 106 links to your webpage. The number of links you need to reach the first SERP depends on how competitive the search is, which is why it is important to choose less competitive keywords to rank for.
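Once you have the linking-domain count for each first-page result, the baseline is an average. The sketch below uses made-up counts for ten hypothetical results (not the figures in the screenshot) and returns both the mean and the median. The median is included as a design choice: one very strong competitor, like the 300 below, pulls the mean up sharply, while the median stays closer to what a typical first-page result has.

```python
from statistics import mean, median


def link_targets(linking_domain_counts):
    """Return (mean, median) linking domains across the first-page results."""
    if not linking_domain_counts:
        raise ValueError("need at least one competitor count")
    return mean(linking_domain_counts), median(linking_domain_counts)


# Hypothetical linking-domain counts for the top 10 results.
counts = [40, 25, 31, 12, 22, 18, 35, 27, 300, 10]
avg, mid = link_targets(counts)
print(f"mean: {avg}, median: {mid}")  # mean: 52, median: 26.0
```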

Now that you know how many links you need to compete with other first-page contenders, you may be wondering: how do you know which content should be earning all these links?

Again, you will have to look at the first ten results on the SERP for this answer.